[Avg. reading time: 0 minutes]

[Avg. reading time: 2 minutes]

Disclaimer

I want you to know these things.

- First, you are not behind, you are learning on schedule.

- Second, feeling like an imposter is normal, it means you are stretching your skills.

- Third, ignore the online noise. Learning is simple: learn something, think about it, practice it, repeat.

- Lastly, tools will change, but your ability to learn will stay.

Certificates are good, but projects and understanding matter more. Ask questions, help each other, and don’t do this journey alone.

[Avg. reading time: 4 minutes]

Required Tools

Install these softwares before Week 2.

Windows

-

After installing, reopen the terminal and try this from Command prompt.

curl https://curl.se/info

Mac

Common Tools

[Avg. reading time: 3 minutes]

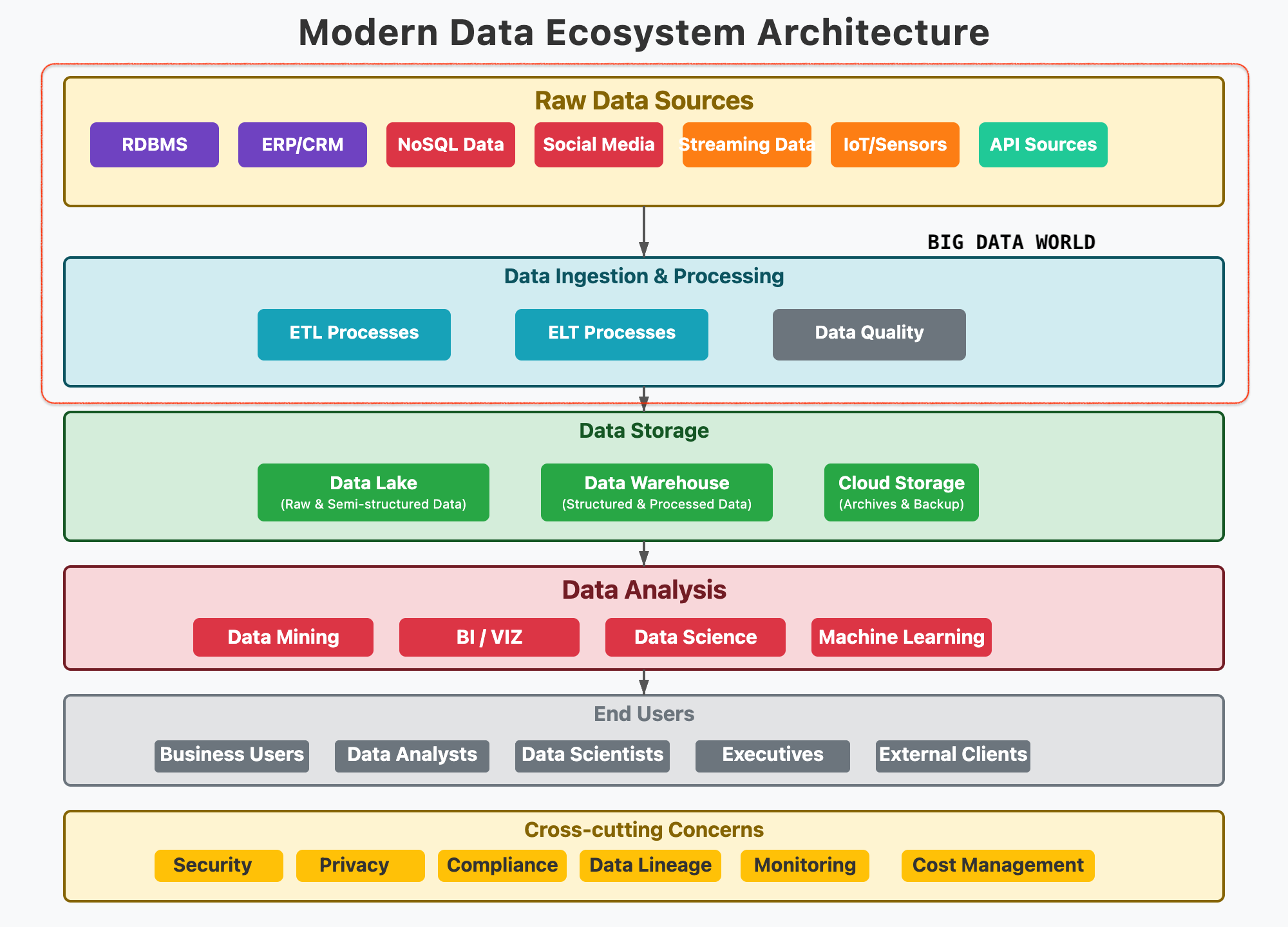

Big Data Overview

- Introduction

- Job Opportunities

- What is Data?

- How does it help?

- Types of Data

- The Big V’s

- Trending Technologies

- Big Data Concerns

- Big Data Challenges

- Data Integration

- Scaling

- Cap Theorem

- Optimistic Concurrency

- Eventual Consistency

- Concurrent vs Parallel

- GPL

- DSL

- Big Data Tools

- NO Sql Databases

- Learning Big Data means?

#introduction #bigdata #chapter1

[Avg. reading time: 2 minutes]

Understanding the Big Data Landscape

Expectation in this course

The first set of questions, which everyone is curious to know.

What is Big Data?

When does the data become Big Data?

Why collect so much Data?

How secure is Big Data?

How does it help?

Where can it be stored?

Which Tools are used to handle Big Data?

The second set of questions to get in deep.

What should I learn?

Does certification help?

Which technology is the best?

How many tools do I need to learn?

Apart from the top 50 corporations, do other companies use Big Data?

[Avg. reading time: 3 minutes]

Job Opportunities

| Role | On-Prem | Big Data Specific | Cloud |

|---|---|---|---|

| Database Developer | ✅ | ✅ | ✅ |

| Data Engineer | ✅ | ✅ | ✅ |

| Database Administrator | ✅ | ✅ | ✅ |

| Data Architect | ✅ | ✅ | ✅ |

| Database Security Eng. | ✅ | ✅ | ✅ |

| Database Manager | ✅ | ✅ | ✅ |

| Data Analyst | ✅ | ✅ | ✅ |

| Business Intelligence | ✅ | ✅ | ✅ |

Database Developer: Designs and writes efficient queries, procedures, and data models for structured databases.

Data Engineer: Builds and maintains scalable data pipelines and ETL processes for large-scale data movement and transformation.

Database Administrator (DBA): Manages and optimizes database systems, ensuring performance, security, and backups.

Data Architect: Defines high-level data strategy and architecture, ensuring alignment with business and technical needs.

Database Security Engineer: Implements and monitors security controls to protect data assets from unauthorized access and breaches.

Database Manager: Oversees database teams and operations, aligning database strategy with organizational goals.

Data Analyst: Interprets data using statistical tools to generate actionable insights for decision-makers.

Business Intelligence (BI) Developer: Creates dashboards, reports, and visualizations to help stakeholders understand data trends and KPIs.

All small to enterprise organizations use Big data to develop their business.

[Avg. reading time: 4 minutes]

What is Data?

Data is simply facts and figures. When processed and contextualized, data becomes information.

Everything is data

- What we say

- Where we go

- What we do

How to measure data?

byte - 1 letter

1 Kilobyte - 1024 B

1 Megabyte - 1024 KB

1 Gigabyte - 1024 MB

1 Terabyte - 1024 GB

(1,099,511,627,776 Bytes)

1 Petabyte - 1024 TB

1 Exabyte - 1024 PB

1 Zettabyte - 1024 EB

1 Yottabyte - 1024 ZB

Examples of Traditional Data

- Banking Records

- Student Information

- Employee Profiles

- Customer Details

- Sales Transactions

When Data becomes Big Data?

When data expands

- Banking: One bank branch vs. global consolidation (e.g., CitiBank)

- Education: One college vs. nationwide student data (e.g., US News)

- Media: Traditional news vs. user-generated content on Social Media

When data gets granular

- Monitoring CPU/Memory usage every second

- Cell phone location & usage logs

- IoT sensor telemetry (temperature, humidity, etc.)

- Social media posts, reactions, likes

- Live traffic data from vehicles and sensors

These fine-grained data points fuel powerful analytics and real-time insights.

Why Collect So Much Data?

- Storage is cheap and abundant

- Tech has advanced to process massive data efficiently

- Businesses use data to innovate, predict trends, and grow

#data #bigdata #traditionaldata

[Avg. reading time: 3 minutes]

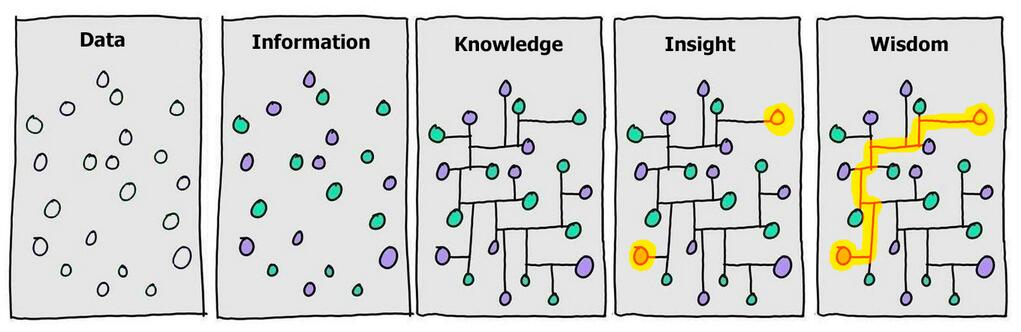

How Big Data helps us

From raw blocks to building knowledge, Big Data drives global progress.

Stages

- Data → scattered observations

- Information → contextualized

- Knowledge → structured relationships

- Insight → patterns emerge

- Wisdom → actionable strategy

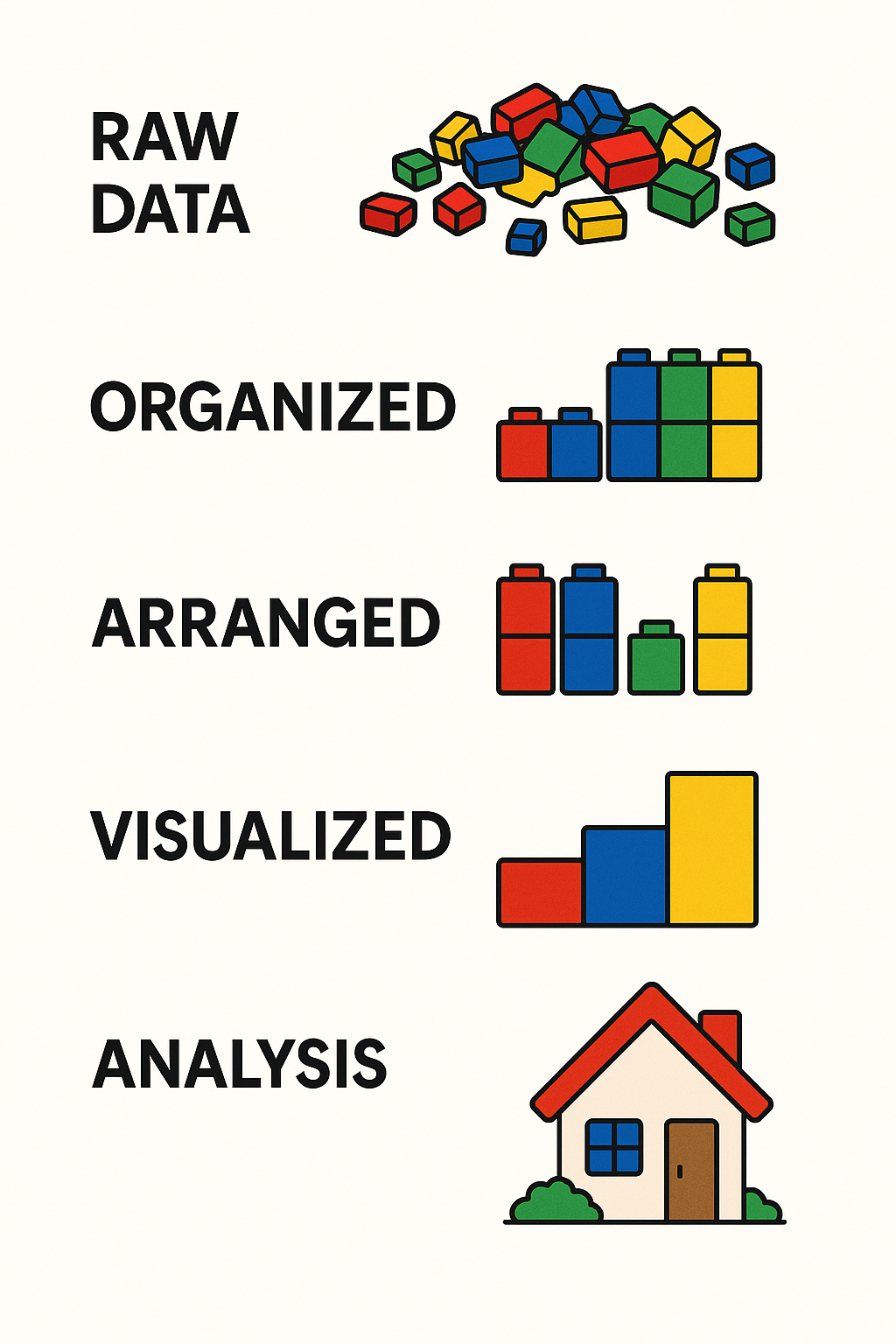

Raw Data to Analysis

Stages

- Raw Data – Messy, unprocessed

- Organized – Grouped by category

- Arranged – Structured to show comparisons

- Visualized – Charts or graphs

- Analysis – Final understanding or solution

Big Data Applications: Changing the World

Here are some real-world domains where Big Data is making a difference:

- Healthcare – Diagnose diseases earlier and personalize treatment

- Agriculture – Predict crop yield and detect pest outbreaks

- Space Exploration – Analyze signals from space and optimize missions

- Disaster Management – Forecast earthquakes, floods, and storms

- Crime Prevention – Predict and detect crime patterns

- IoT & Smart Devices – Real-time decision making in smart homes, vehicles, and cities

#bigdata #rawdata #knowledge #analysis

[Avg. reading time: 7 minutes]

Types of Data

Understanding the types of data is key to processing and analyzing it effectively. Broadly, data falls into two main categories: Quantitative and Qualitative.

Quantitative Data

Quantitative data deals with numbers and measurable forms. It can be further classified as Discrete or Continuous.

- Measurable values (e.g., memory usage, CPU usage, number of likes, shares, retweets)

- Collected from the real world

- Usually close-ended

Discrete

- Represented by whole numbers

- Countable and finite

Example:

- Number of cameras in a phone

- Memory size in GB

Qualitative Data

Qualitative data describes qualities or characteristics that can’t be easily measured numerically.

- Descriptive or abstract

- Can come from text, audio, or images

- Collected via interviews, surveys, or observations

- Usually open-ended

Examples

- Gender: Male, Female, Non-Binary, etc.

- Smartphones: iPhone, Pixel, Motorola, etc.

Nominal

Categorical data without any intrinsic order

Examples:

- Red, Blue, Green

- Types of fruits: Apple, Banana, Mango

Can you rank them logically? No — that’s what makes them nominal.

graph TD A[Types of Data] A --> B[Quantitative] A --> C[Qualitative] B --> B1[Discrete] B --> B2[Continuous] C --> C1[Nominal] C --> C2[Ordinal]

| Category | Subtype | Description | Examples |

|---|---|---|---|

| Quantitative | Discrete | Whole numbers, countable | Number of phones, number of users |

| Continuous | Measurable, can take fractional values | Temperature, CPU usage | |

| Qualitative | Nominal | Categorical with no natural order | Gender, Colors (Red, Blue, Green) |

| Ordinal | Categorical with a meaningful order | T-shirt sizes (S, M, L), Grades (A, B, C…) |

Abstract Understanding

Some qualitative data comes from non-traditional sources like:

- Conversations

- Audio or video files

- Observations or open-text survey responses

This type of data often requires interpretation before it’s usable in models or analysis.

#quantitative #qualitative #discrete #continuous #nominal #ordinal

[Avg. reading time: 1 minute]

The Big V’s of Big Data

[Avg. reading time: 7 minutes]

Variety

Variety refers to the different types, formats, and sources of data collected — one of the 5 Vs of Big Data.

Types of Data : By Source

- Social Media: YouTube, Facebook, LinkedIn, Twitter, Instagram

- IoT Devices: Sensors, Cameras, Smart Meters, Wearables

- Finance/Markets: Stock Market, Cryptocurrency, Financial APIs

- Smart Systems: Smart Cars, Smart TVs, Home Automation

- Enterprise Systems: ERP, CRM, SCM Logs

- Public Data: Government Open Data, Weather Stations

Types of Data : By Data format

- Structured Data – Organized in rows and columns (e.g., CSV, Excel, RDBMS)

- Semi-Structured Data – Self-describing but irregular (e.g., JSON, XML, Avro, YAML)

- Unstructured Data – No fixed schema (e.g., images, audio, video, emails)

- Binary Data – Encoded, compressed, or serialized data (e.g., Parquet, Protocol Buffers, images, MP3)

Generally unstructured data files are stored in binary format, Example: Images, Video, Audio

But not all binary files contain unstructured data. Example: Parquet, Executable.

Structured Data

Tabular data from databases, spreadsheets.

Example:

- Relational Table

- Excel

| ID | Name | Join Date |

|---|---|---|

| 101 | Rachel Green | 2020-05-01 |

| 201 | Joey Tribianni | 1998-07-05 |

| 301 | Monica Geller | 1999-12-14 |

| 401 | Cosmo Kramer | 2001-06-05 |

Semi-Structred Data

Data with tags or markers but not strictly tabular.

JSON

[

{

"id":1,

"name":"Rachel Green",

"gender":"F",

"series":"Friends"

},

{

"id":"2",

"name":"Sheldon Cooper",

"gender":"M",

"series":"BBT"

}

]

XML

<?xml version="1.0" encoding="UTF-8"?>

<actors>

<actor>

<id>1</id>

<name>Rachel Green</name>

<gender>F</gender>

<series>Friends</series>

</actor>

<actor>

<id>2</id>

<name>Sheldon Cooper</name>

<gender>M</gender>

<series>BBT</series>

</actor>

</actors>

Unstructured Data

Media files, free text, documents, logs – no predefined structure.

Rachel Green acted in Friends series. Her role is very popular.

Similarly Sheldon Cooper acted in BBT. He acted as nerd physicist.

Types:

- Images (JPG, PNG)

- Video (MP4, AVI)

- Audio (MP3, WAV)

- Documents (PDF, DOCX)

- Emails

- Logs (system logs, server logs)

- Web scraping content (HTML, raw text)

Note: Now we have lot of LLM (AI tools) that helps us parse Unstructured Data into tabular data quickly.

#structured #unstructured #semistructured #binary #json #xml #image #bigdata #bigv

[Avg. reading time: 4 minutes]

Volume

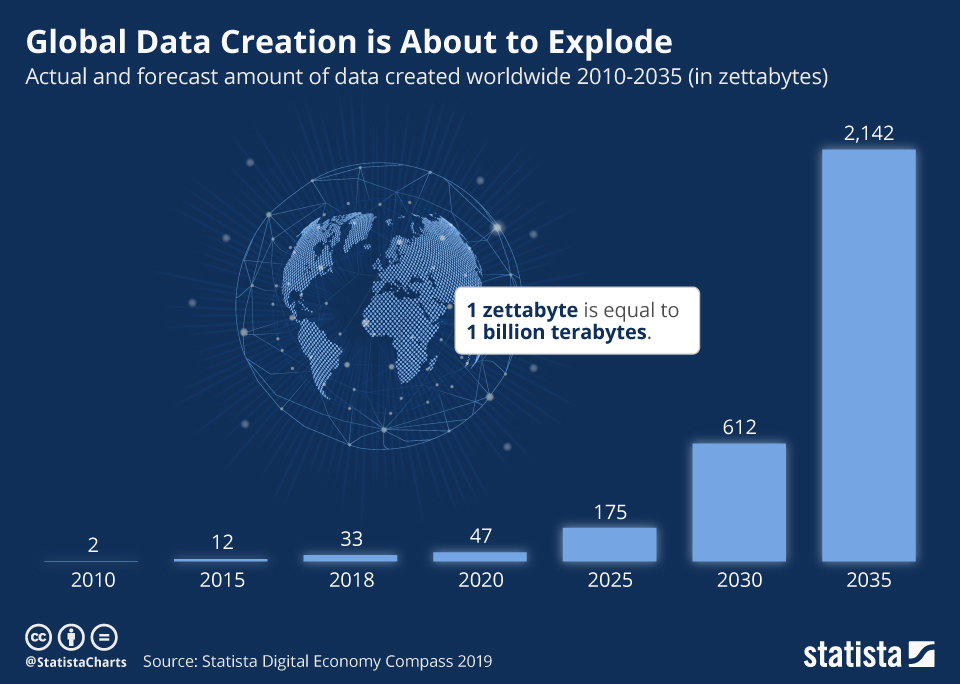

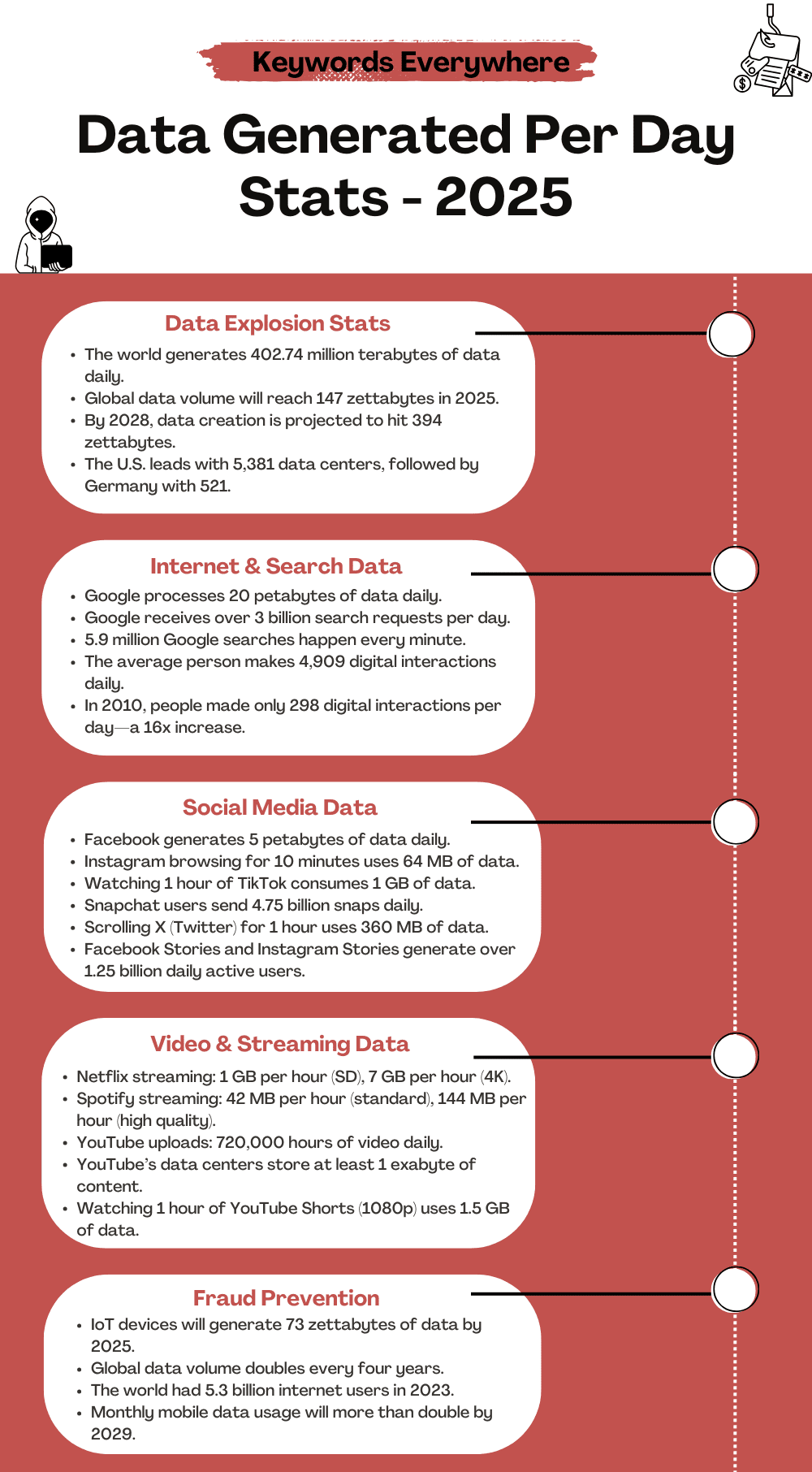

Volume refers to the sheer amount of data generated every second from various sources around the world. It’s one of the core characteristics that makes data big.With the rise of the internet, smartphones, IoT devices, social media, and digital services, the amount of data being produced has reached zettabyte and soon yottabyte scales.

- YouTube users upload 500+ hours of video every minute.

- Facebook generates 4 petabytes of data per day.

- A single connected car can produce 25 GB of data per hour.

- Enterprises generate terabytes to petabytes of log, transaction, and sensor data daily.

Why It Matters

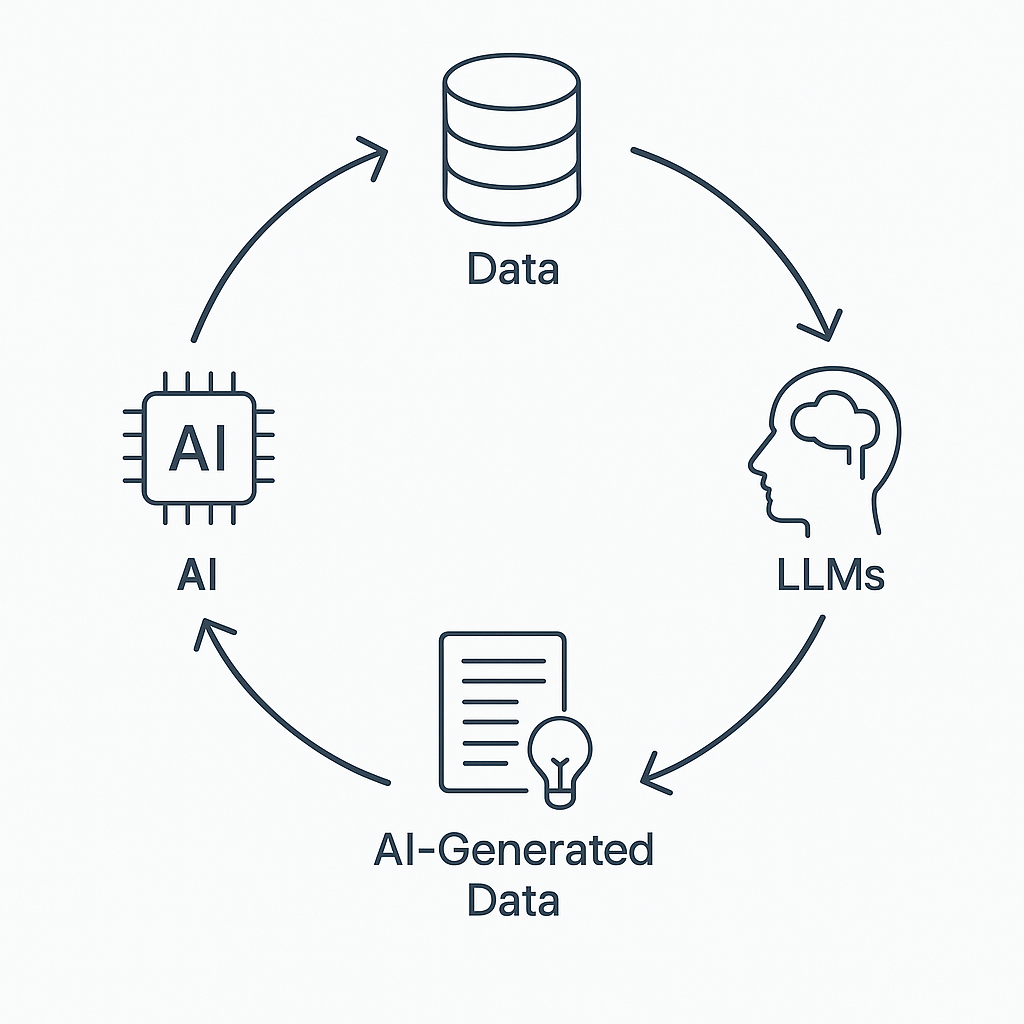

With the rise of Artificial Intelligence (AI) and especially Large Language Models (LLMs) like ChatGPT, Bard, and Claude, the volume of data being generated, consumed, and required for training is skyrocketing.

-

LLMs Need Massive Training Data

-

LLMs generated content is exponential — blogs, reports, summaries, images, audio, and even code.

-

Storage systems must scale horizontally to handle petabytes or more.

-

Traditional databases can’t manage this scale efficiently.

-

Volume impacts data ingestion, processing speed, query performance, and cost.

-

It influences how data is partitioned, replicated, and compressed in distributed systems.

[Avg. reading time: 4 minutes]

Velocity

Velocity refers to the speed at which data is generated, transmitted, and processed. In the era of Big Data, it’s not just about handling large volumes of data, but also about managing the continuous and rapid flow of data in real-time or near real-time.

High-velocity data comes from various sources such as:

- Social Media Platforms: Tweets, posts, likes, and shares occurring every second.

- Sensor Networks: IoT devices transmitting data continuously.

- Financial Markets: Real-time transaction data and stock price updates.

- Online Streaming Services: Continuous streaming of audio and video content.

- E-commerce Platforms: Real-time tracking of user interactions and transactions.

Managing this velocity requires systems capable of:

- Real-Time Data Processing: Immediate analysis and response to incoming data.

- Scalability: Handling increasing data speeds without performance degradation.

- Low Latency: Minimizing delays in data processing and response times.

Source1

Source1

1: https://keywordseverywhere.com/blog/data-generated-per-day-stats/

[Avg. reading time: 7 minutes]

Veracity

Veracity refers to the trustworthiness, quality, and accuracy of data. In the world of Big Data, not all data is created equal — some may be incomplete, inconsistent, outdated, or even deliberately false. The challenge is not just collecting data, but ensuring it’s reliable enough to make sound decisions.

Why Veracity Matters

-

Poor data quality can lead to wrong insights, flawed models, and bad business decisions.

-

With increasing sources (social media, sensors, web scraping), there’s more noise than ever.

-

Real-world data often comes with missing values, duplicates, biases, or outliers.

Key Dimensions of Veracity in Big Data

| Dimension | Description | Example |

|---|---|---|

| Trustworthiness | Confidence in the accuracy and authenticity of data. | Verifying customer feedback vs. bot reviews |

| Origin | The source of the data and its lineage or traceability. | Knowing if weather data comes from reliable source |

| Completeness | Whether the dataset has all required fields and values. | Missing values in patient health records |

| Integrity | Ensuring the data hasn’t been altered, corrupted, or tampered with during storage or transfer. | Using checksums to validate data blocks |

How to Tackle Veracity Issues

- Data Cleaning: Remove duplicates, correct errors, fill missing values.

- Validation & Verification: Check consistency across sources.

- Data Provenance: Track where the data came from and how it was transformed.

- Bias Detection: Identify and reduce systemic bias in training datasets.

- Robust Models: Build models that can tolerate and adapt to noisy inputs.

Websites & Tools to Generate Sample Data

Highly customizable fake data generator; supports exporting as CSV, JSON, SQL. https://mockaroo.com

Easy UI to create datasets with custom fields like names, dates, numbers, etc. https://www.onlinedatagenerator.com

Apart from this, there are few Data generating libraries.

https://faker.readthedocs.io/en/master/

https://github.com/databrickslabs/dbldatagen

Question?

Is generating fake data good or bad?

When we have real data? why generate fake data?

[Avg. reading time: 3 minutes]

Other V’s in Big Data

| Other V’s | Meaning | Key Question / Use Case |

|---|---|---|

| Value | Business/Customer Impact | What value does this data bring to the business or end users? |

| Visualization | Data Representation | Can the data be visualized clearly to aid understanding and decisions? |

| Viability | Production/Sustainability | Is it viable to operationalize and sustain this data in production systems? |

| Virality | Shareability/Impact | Will the message or insight be effective when shared across channels (e.g., social media)? |

| Version | Data Versioning | Do we need to maintain different versions? Is the cost of versioning justified? |

| Validity | Time-Sensitivity | How long is the data relevant? Will its meaning or utility change over time? |

Example

-

Validity: Zoom usage data from 2020 was valid during lockdown, can that be used for benchmarking?

-

Virality: A meme might go viral on Instagram and not received well in Twitter or LinkedIn.

-

Version: For some master records, we might need versioned data. For simple web traffic counts, maybe not.

#bigdata #otherv #value #version #validity

[Avg. reading time: 7 minutes]

Trending Technologies

Powered by Big Data

Big Data isn’t just about storing and processing huge volumes of information — it’s the engine that drives modern innovation. From healthcare to self-driving cars, Big Data plays a critical role in shaping the technologies we use and depend on every day.

Where Big Data Is Making an Impact

-

Robotics

Enhances learning and adaptive behavior in robots by feeding real-time and historical data into control algorithms. -

Artificial Intelligence (AI)

The heart of AI — machine learning models rely on Big Data to train, fine-tune, and make accurate predictions. -

Internet of Things (IoT)

Millions of devices — from smart thermostats to industrial sensors — generate data every second. Big Data platforms analyze this for real-time insights. -

Internet & Mobile Apps

Collect user behavior data to power personalization, recommendations, and user experience optimization. -

Autonomous Cars & VANETs (Vehicular Networks)

Use sensor and network data for route planning, obstacle avoidance, and decision-making. -

Wireless Networks & 5G

Big Data helps optimize network traffic, reduce latency, and predict service outages before they occur. -

Voice Assistants (Siri, Alexa, Google Assistant)

Depend on Big Data and NLP models to understand speech, learn preferences, and respond intelligently. -

Cybersecurity

Uses pattern detection on massive datasets to identify anomalies, prevent attacks, and detect fraud in real time. -

Bioinformatics & Genomics

Big Data helps decode genetic sequences, enabling personalized medicine and new drug discoveries. Big Data was a game-changer in the development and distribution of COVID-19 vaccineshttps://pmc.ncbi.nlm.nih.gov/articles/PMC9236915/

-

Renewable Energy

Analyzes weather, consumption, and device data to maximize efficiency in solar, wind, and other green technologies. -

Neural Networks & Deep Learning

These advanced AI models require large-scale labeled data for training complex tasks like image recognition or language translation.

Broad Use Areas for Big Data

| Area | Description |

|---|---|

| Data Mining & Analytics | Finding patterns and insights from raw data |

| Data Visualization | Presenting data in a human-friendly, understandable format |

| Machine Learning | Training models that learn from historical data |

#bigdata #technologies #iot #ai #robotics

[Avg. reading time: 6 minutes]

Big Data Concerns

Big Data brings massive potential, but it also introduces ethical, technical, and societal challenges. Below is a categorized view of key concerns and how they can be mitigated.

Privacy, Security & Governance

Concerns

- Privacy: Risk of misuse of sensitive personal data.

- Security: Exposure to cyberattacks and data breaches.

- Governance: Lack of clarity on data ownership and access rights.

Mitigation

- Use strong encryption, anonymization, and secure access controls.

- Conduct regular security audits and staff awareness training.

- Define and enforce data governance policies on ownership, access, and lifecycle.

- Establish consent mechanisms and transparent data usage policies.

Data Quality, Accuracy & Interpretation

Concerns

- Inaccurate, incomplete, or outdated data may lead to incorrect decisions.

- Misinterpretation due to lack of context or domain understanding.

Mitigation

- Implement data cleaning, validation, and monitoring procedures.

- Train analysts to understand data context.

- Use cross-functional teams for balanced analysis.

- Maintain data lineage and proper documentation.

Ethics, Fairness & Bias

Concerns

- Potential for discrimination or unethical use of data.

- Over-reliance on algorithms may overlook human factors.

Mitigation

- Develop and follow ethical guidelines for data usage.

- Perform bias audits and impact assessments regularly.

- Combine data-driven insights with human judgment.

Regulatory Compliance

Concerns

- Complexity of complying with regulations like GDPR, HIPAA, etc.

Mitigation

- Stay current with relevant data protection laws.

- Assign a Data Protection Officer (DPO) to ensure ongoing compliance and oversight.

Environmental and Social Impact

Concerns

- High energy usage of data centers contributes to carbon emissions.

- Digital divide may widen gaps between those who can access Big Data and those who cannot.

Mitigation

- Use energy-efficient infrastructure and renewable energy sources.

- Support data literacy, open data access, and inclusive education initiatives.

#bigdata #concerns #mitigation

[Avg. reading time: 9 minutes]

Big Data Challenges

As organizations adopt Big Data, they face several challenges — technical, organizational, financial, legal, and ethical. Below is a categorized overview of these challenges along with effective mitigation strategies.

1. Data Storage & Management

Challenge:

Efficiently storing and managing ever-growing volumes of structured, semi-structured, and unstructured data.

Mitigation:

- Use scalable cloud storage and distributed file systems like HDFS or Delta Lake.

- Establish data lifecycle policies, retention rules, and metadata catalogs for better management.

2. Data Processing & Real-Time Analytics

Challenges:

- Processing huge datasets with speed and accuracy.

- Delivering real-time insights for time-sensitive decisions.

Mitigation:

- Leverage tools like Apache Spark, Flink, and Hadoop for distributed processing.

- Use streaming platforms like Kafka or Spark Streaming.

- Apply parallel and in-memory processing where possible.

3. Data Integration & Interoperability

Challenge:

Bringing together data from diverse sources, formats, and systems into a unified view.

Mitigation:

- Implement ETL/ELT pipelines, data lakes, and integration frameworks.

- Apply data transformation and standardization best practices.

4. Privacy, Security & Compliance

Challenges:

- Preventing data breaches and unauthorized access.

- Adhering to global and regional data regulations (e.g., GDPR, HIPAA, CCPA).

Mitigation:

- Use encryption, role-based access controls, and audit logging.

- Conduct regular security assessments and appoint a Data Protection Officer (DPO).

- Stay current with evolving regulations and enforce compliance frameworks.

5. Data Quality & Trustworthiness

Challenge:

Ensuring that data is accurate, consistent, timely, and complete.

Mitigation:

- Use data validation, cleansing tools, and automated quality checks.

- Monitor for data drift and inconsistencies in real time.

- Maintain data provenance for traceability.

6. Skill Gaps & Talent Shortage

Challenge:

A lack of professionals skilled in Big Data technologies, analytics, and data engineering.

Mitigation:

- Invest in upskilling programs, certifications, and academic partnerships.

- Foster a culture of continuous learning and data literacy across roles.

7. Cost & Resource Management

Challenge:

Managing the high costs associated with storing, processing, and analyzing large-scale data.

Mitigation:

- Optimize workloads using cloud-native autoscaling and resource tagging.

- Use open-source tools where possible.

- Monitor and forecast data usage to control spending.

8. Scalability & Performance

Challenge:

Keeping up with growing data volumes and system demands without compromising performance.

Mitigation:

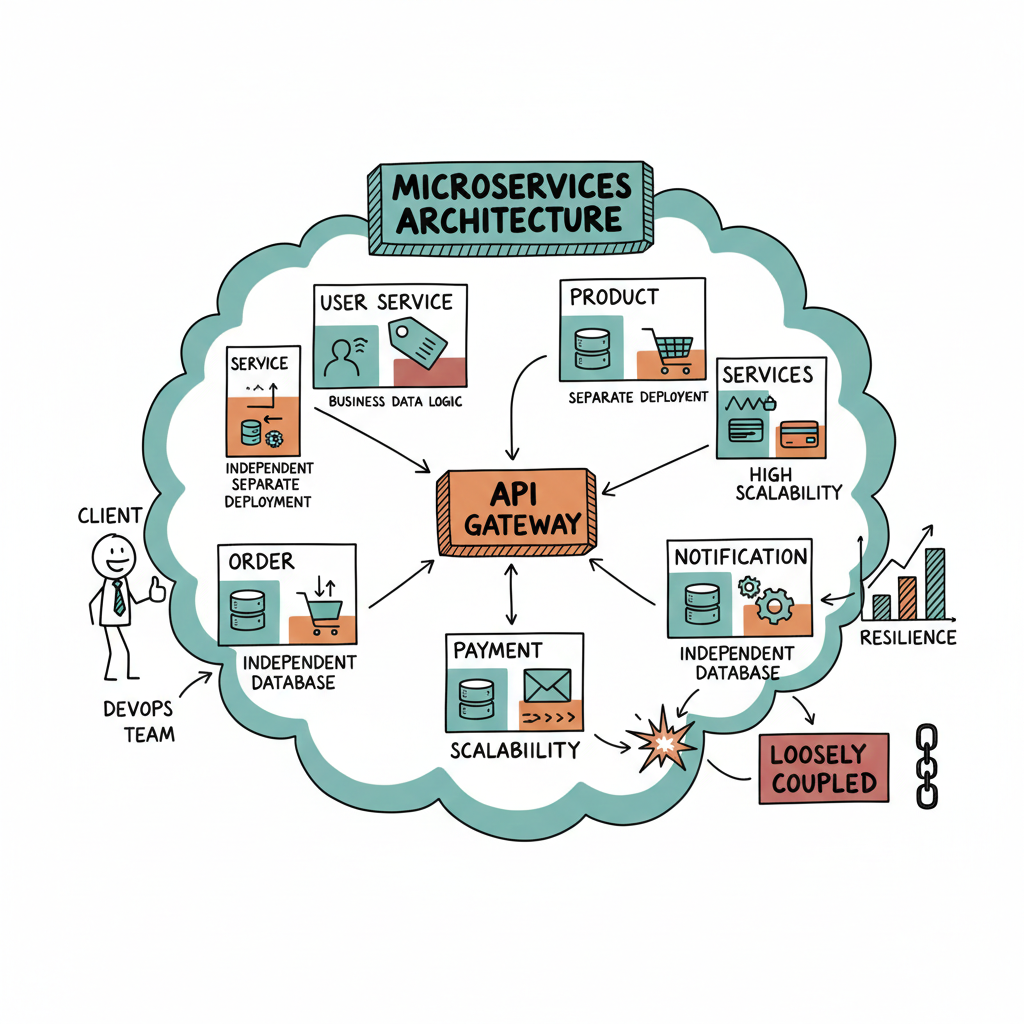

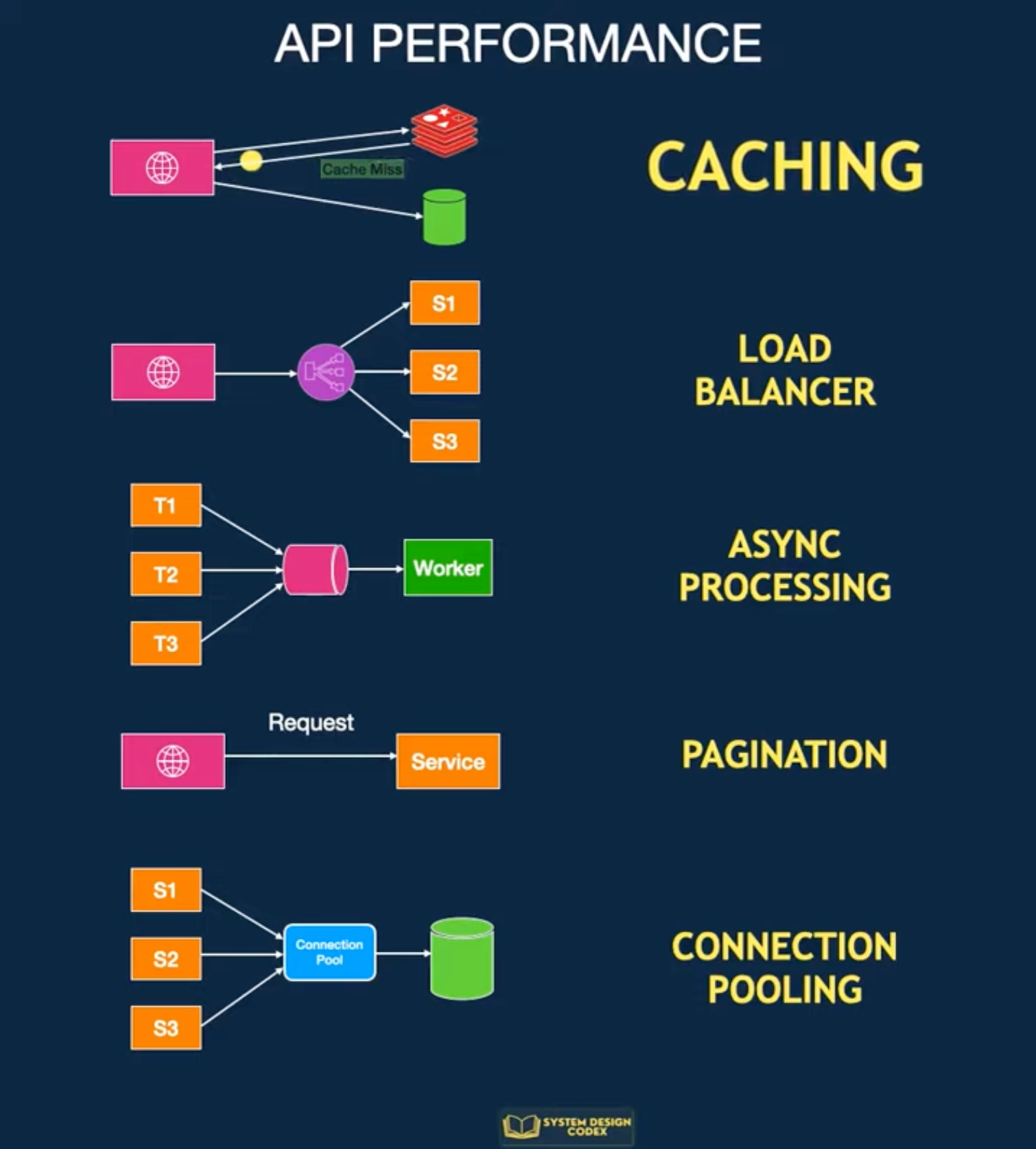

- Design for horizontal scalability using microservices and cloud-native infrastructure.

- Implement load balancing, data partitioning, and caching strategies.

9. Ethics, Governance & Transparency

Challenges:

- Managing bias, fairness, and responsible data usage.

- Ensuring transparency in algorithms and decisions.

Mitigation:

- Establish data ethics policies and review boards.

- Perform regular audits and impact assessments.

- Clearly communicate how data is collected, stored, and used.

#bigdata #ethics #storage #realtime #interoperability #privacy #dataquality

[Avg. reading time: 9 minutes]

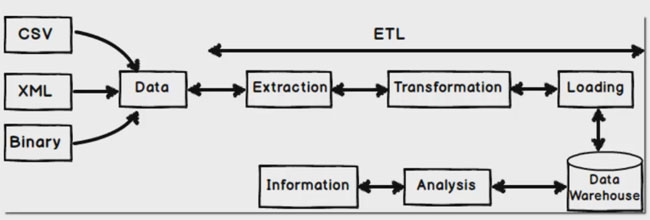

Data Integration

Data integration in the Big Data ecosystem differs significantly from traditional Relational Database Management Systems (RDBMS). While traditional systems rely on structured, predefined workflows, Big Data emphasizes scalability, flexibility, and performance.

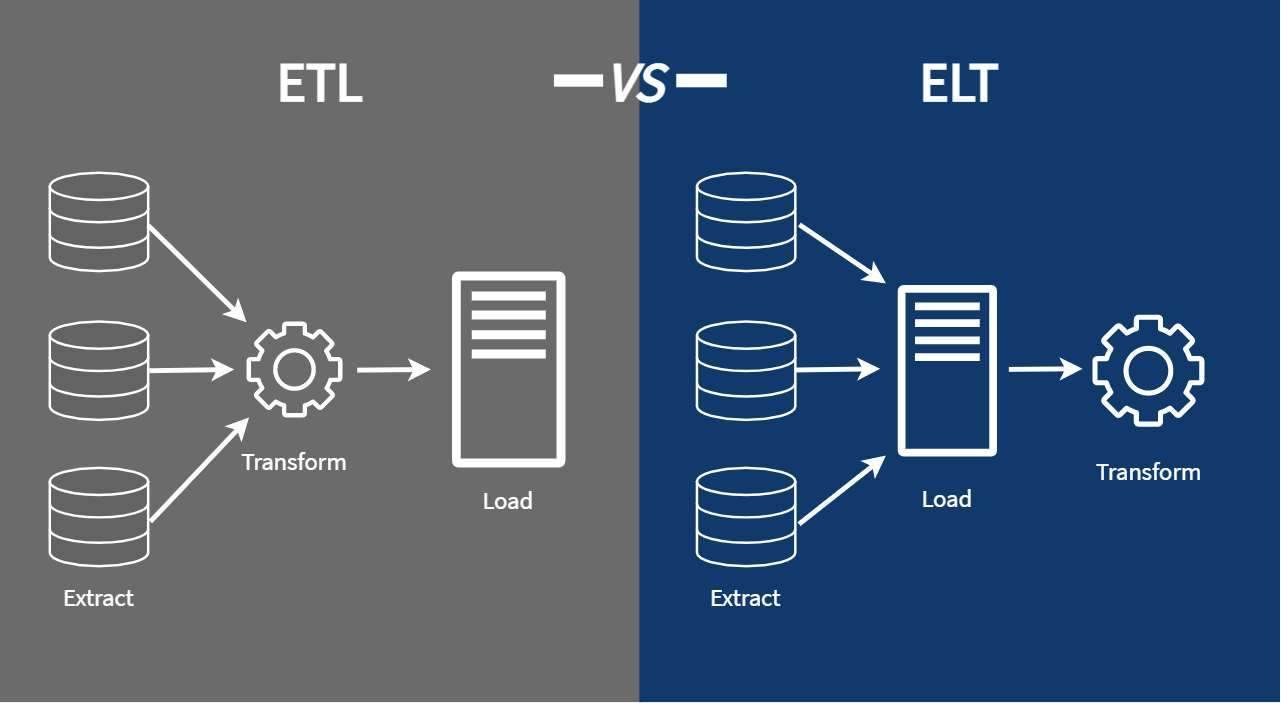

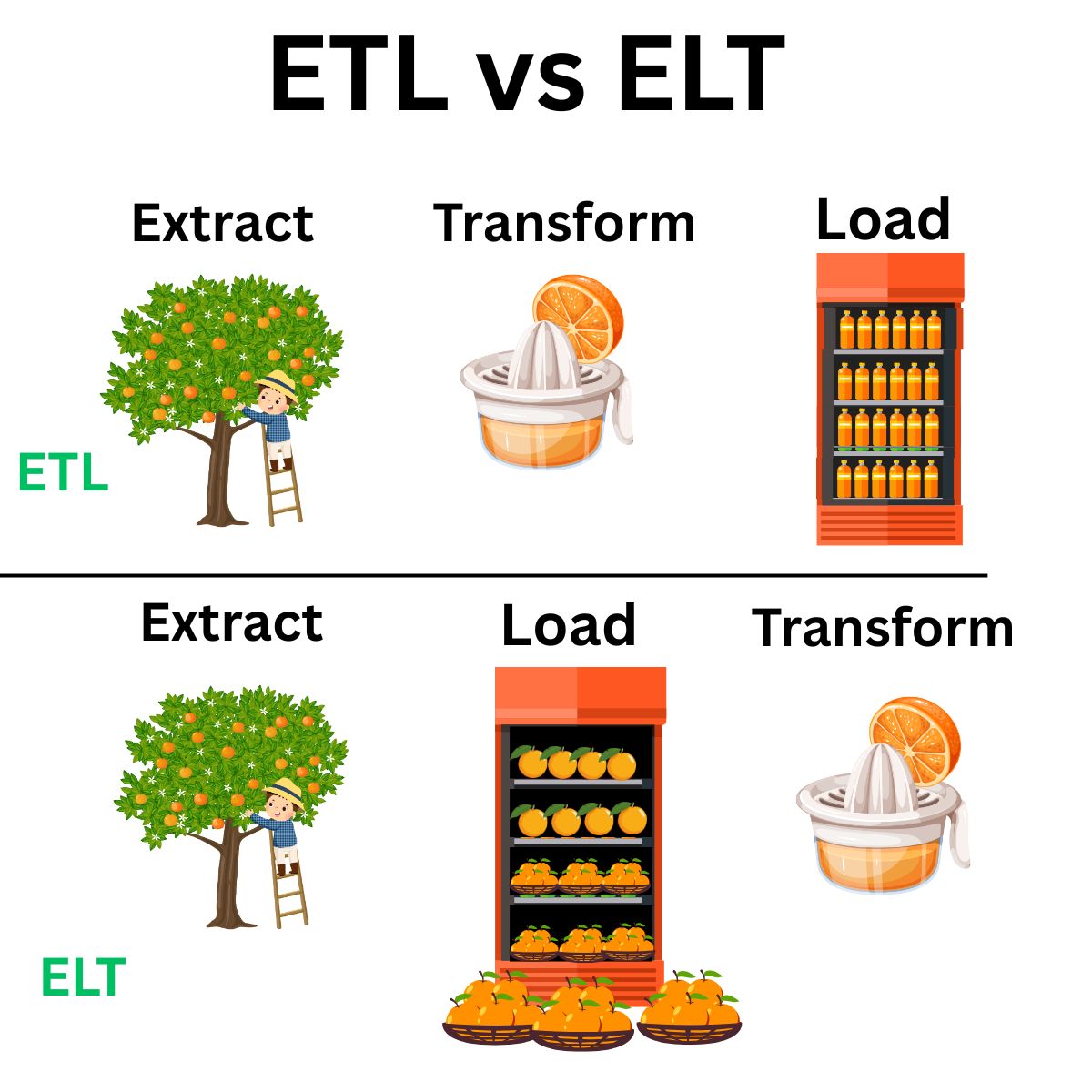

ETL: Extract Transform Load

ETL is a traditional data integration approach used primarily with RDBMS technologies such as MySQL, SQL Server, and Oracle.

Workflow

- Extract data from source systems.

- Transform it into the required format.

- Load it into the target system (e.g., a data warehouse).

ETL Tools

- SSIS / SSDT – SQL Server Integration Services / Data Tools

- Pentaho Kettle – Open-source ETL platform

- Talend – Data integration and transformation platform

- Benetl – Lightweight ETL for MySQL and PostgreSQL

ETL tools are well-suited for batch processing and structured environments but may struggle with scale and unstructured data.

src 1

src 2

ELT: Extract Load Transform

ELT is the modern, Big Data-friendly approach. Instead of transforming data before loading, ELT prioritizes loading raw data first and transforming later.

Benefits

- Immediate ingestion of all types of data (structured or unstructured)

- Flexible transformation logic, applied post-load

- Faster load times and higher throughput

- Reduced operational overhead for loading processes

Challenges

- Security blind spots may arise from loading raw data upfront

- Compliance risks due to delayed transformation (HIPAA, GDPR, etc.)

- High storage costs if raw data is stored unfiltered in cloud/on-prem systems

ELT is ideal for data lakes, streaming, and cloud-native architectures.

Typical Big Data Flow

Raw Data → Cleansed Data → Data Processing → Data Warehousing → ML / BI / Analytics

- Raw Data: Initial unprocessed input (logs, JSON, CSV, APIs, sensors)

- Cleansed Data: Cleaned and standardized

- Processing: Performed through tools like Spark, DLT, or Flink

- Warehousing: Data is stored in structured formats (e.g., Delta, Parquet)

- Usage: Data is consumed by ML models, dashboards, or analysts

Each stage involves pipelines, validations, and metadata tracking.

#etl #elt #pipeline #rawdata #datalake

1: Leanmsbitutorial.com

2: https://towardsdatascience.com/how-i-redesigned-over-100-etl-into-elt-data-pipelines-c58d3a3cb3c

[Avg. reading time: 9 minutes]

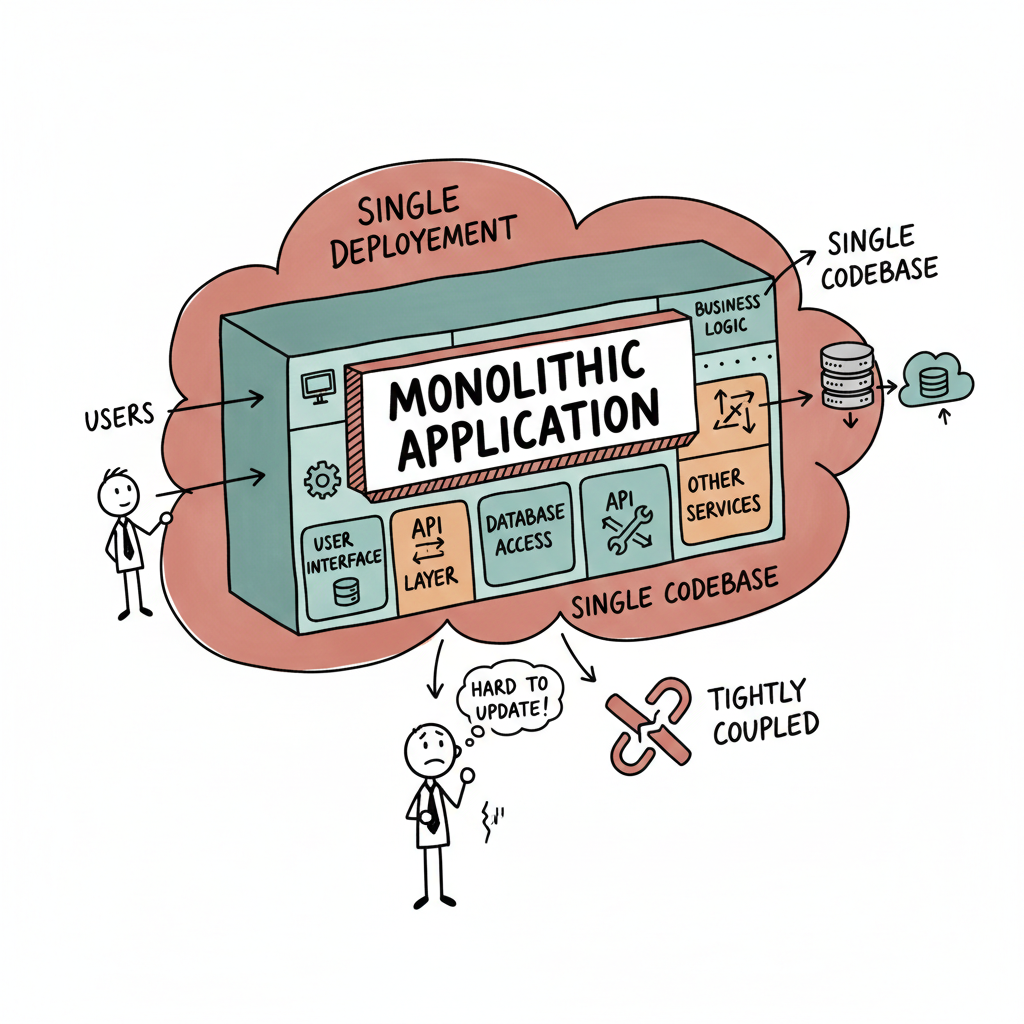

Scaling & Distributed Systems

Scalability is a critical factor in Big Data and cloud computing. As workloads grow, systems must adapt.

There are two main ways to scale infrastructure:

vertical scaling and horizontal scaling. These often relate to how distributed systems are designed and deployed.

Vertical Scaling (Scaling Up)

Vertical scaling means increasing the capacity of a single machine.

Like upgrading your personal computer — adding more RAM, a faster CPU, or a bigger hard drive.

Pros:

- Simple to implement

- No code or architecture changes needed

- Good for monolithic or legacy applications

Cons:

- Hardware has physical limits

- Downtime may be required during upgrades

- More expensive hardware = diminishing returns

Used In:

- Traditional RDBMS

- Standalone servers

- Small-scale workloads

Horizontal Scaling (Scaling Out)

Horizontal scaling means adding more machines (nodes) to handle the load collectively.

Like hiring more team members instead of just working overtime yourself.

Pros:

- More scalable: Keep adding nodes as needed

- Fault tolerant: One machine failure doesn’t stop the system

- Supports distributed computing

Cons:

- More complex to configure and manage

- Requires load balancing, data partitioning, and synchronization

- More network overhead

Used In:

- Distributed databases (e.g., Cassandra, MongoDB)

- Big Data platforms (e.g., Hadoop, Spark)

- Cloud-native applications (e.g., Kubernetes)

Distributed Systems

A distributed system is a network of computers that work together to perform tasks. The goal is to increase performance, availability, and fault tolerance by sharing resources across machines.

Analogy:

A relay team where each runner (node) has a specific part of the race, but success depends on teamwork.

Key Features of Distributed Systems

| Feature | Description |

|---|---|

| Concurrency | Multiple components can operate at the same time independently |

| Scalability | Easily expand by adding more nodes |

| Fault Tolerance | If one node fails, others continue to operate with minimal disruption |

| Resource Sharing | Nodes share tasks, data, and workload efficiently |

| Decentralization | No single point of failure; avoids bottlenecks |

| Transparency | System hides its distributed nature from users (location, access, replication) |

Horizontal Scaling vs. Distributed Systems

| Aspect | Horizontal Scaling | Distributed System |

|---|---|---|

| Definition | Adding more machines (nodes) to handle workload | A system where multiple nodes work together as one unit |

| Goal | To increase capacity and performance by scaling out | To coordinate tasks, ensure fault tolerance, and share resources |

| Architecture | Not necessarily distributed | Always distributed |

| Coordination | May not require nodes to communicate | Requires tight coordination between nodes |

| Fault Tolerance | Depends on implementation | Built-in as a core feature |

| Example | Load-balanced web servers | Hadoop, Spark, Cassandra, Kafka |

| Storage/Processing | Each node may handle separate workloads | Nodes often share or split workloads and data |

| Use Case | Quick capacity boost (e.g., web servers) | Large-scale data processing, distributed storage |

Vertical scaling helps improve single-node power, while horizontal scaling enables distributed systems to grow flexibly. Most modern Big Data systems rely on horizontal scaling for scalability, reliability, and performance.

#scaling #vertical #horizontal #distributed

[Avg. reading time: 9 minutes]

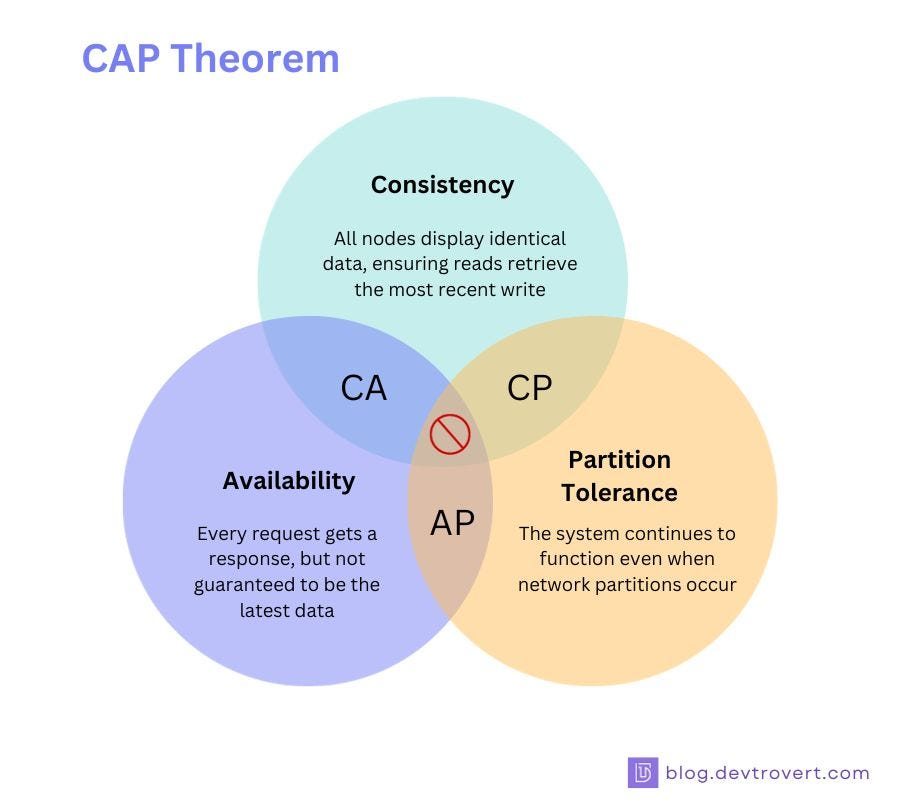

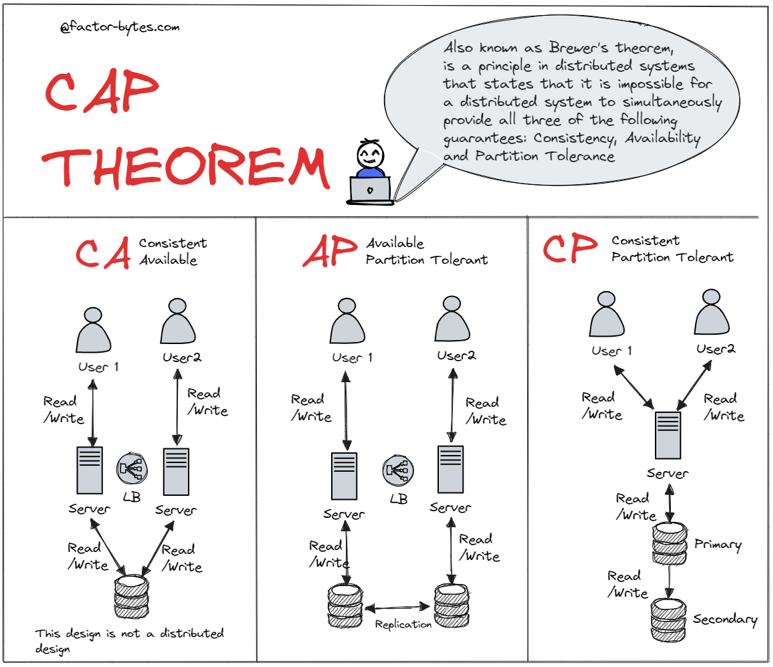

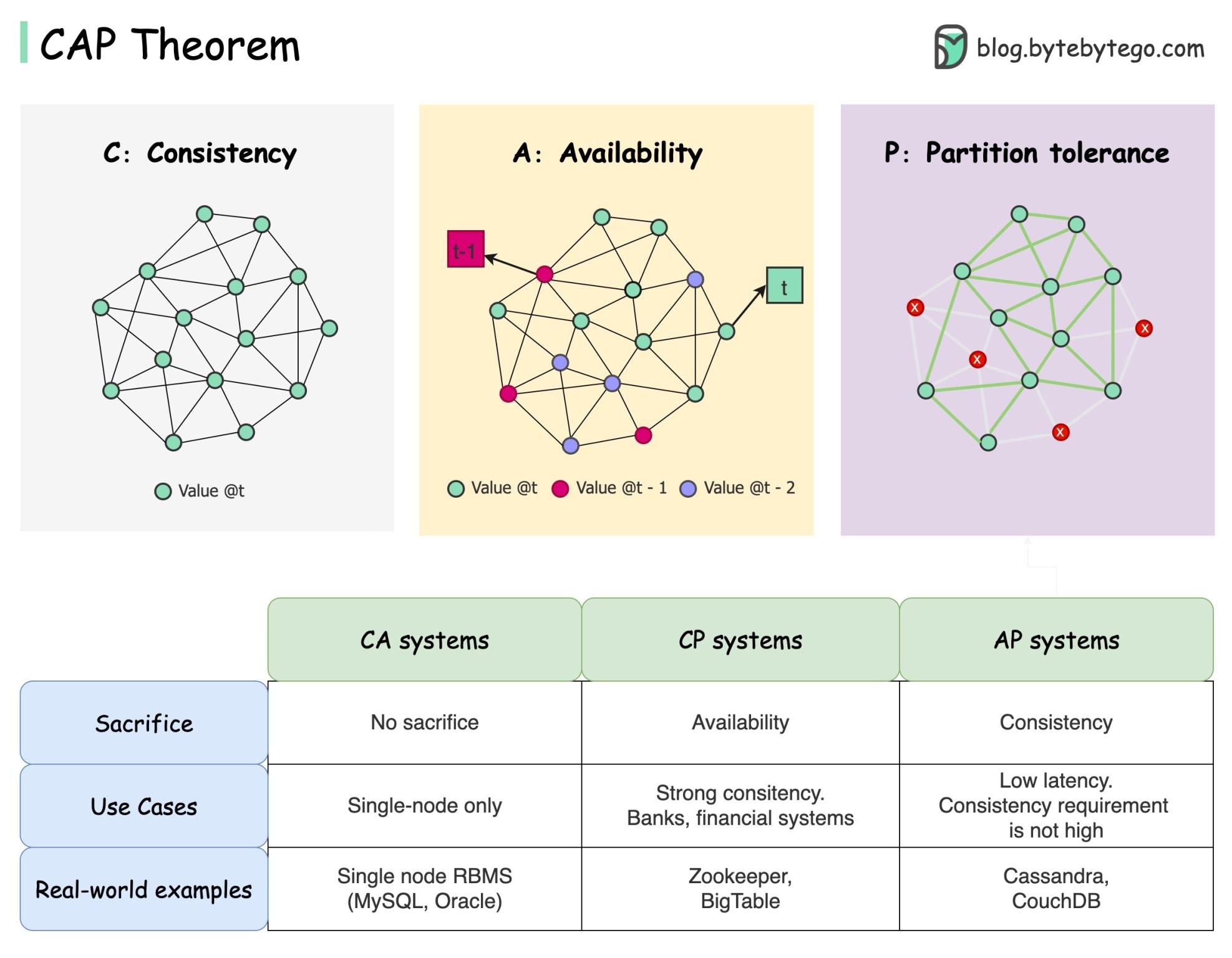

CAP Theorem

src 1

src 1

The CAP Theorem is a fundamental concept in distributed computing. It states that in the presence of a network partition, a distributed system can guarantee only two out of the following three properties:

The Three Components

-

Consistency (C)

Every read receives the most recent write or an error.

Example: If a book’s location is updated in a library system, everyone querying the catalog should see the updated location immediately. -

Availability (A)

Every request receives a (non-error) response, but not necessarily the most recent data.

Example: Like a convenience store that’s always open, even if they occasionally run out of your favorite snack. -

Partition Tolerance (P)

The system continues to function despite network failures or communication breakdowns.

Example: A distributed team in different rooms that still works, even if their intercom fails.

What the CAP Theorem Means

You can only pick two out of three:

| Guarantee Combination | Sacrificed Property | Typical Use Case |

|---|---|---|

| CP (Consistency + Partition) | Availability | Banking Systems, RDBMS |

| AP (Availability + Partition) | Consistency | DNS, Web Caches |

| CA (Consistency + Availability) | Partition Tolerance (Not realistic in distributed systems) | Only feasible in non-distributed systems |

src 2

src 2

Real-World Examples

CAP Theorem trade-offs can be seen in:

- Social Media Platforms – Favor availability and partition tolerance (AP)

- Financial Systems – Require consistency and partition tolerance (CP)

- IoT Networks – Often prioritize availability and partition tolerance (AP)

- eCommerce Platforms – Mix of AP and CP depending on the service

- Content Delivery Networks (CDNs) – Strongly AP-focused for high availability and responsiveness

src 3

src 3

graph TD

A[Consistency]

B[Availability]

C[Partition Tolerance]

A -- CP System --> C

B -- AP System --> C

A -- CA System --> B

subgraph CAP Triangle

A

B

C

end

This diagram shows that you can choose only two at a time:

- CP (Consistency + Partition Tolerance): e.g., traditional databases

- AP (Availability + Partition Tolerance): e.g., DNS, Cassandra

- CA is only theoretical in a distributed environment (it fails when partition occurs)

In distributed systems, network partitions are unavoidable. The CAP Theorem helps us choose which trade-off makes the most sense for our use case.

#cap #consistency #availability #partitiontolerant

1: blog.devtrovert.com

2: Factor-bytes.com

3: blog.bytebytego.com

[Avg. reading time: 6 minutes]

Optimistic concurrency

Optimistic Concurrency is a concurrency control strategy used in databases and distributed systems that allows multiple users or processes to access the same data simultaneously—without locking resources.

Instead of preventing conflicts upfront by using locks, it assumes that conflicts are rare. If a conflict does occur, it’s detected after the operation, and appropriate resolution steps (like retries) are taken.

How It Works

- Multiple users/processes read and attempt to write to the same data.

- Instead of using locks, each update tracks the version or timestamp of the data.

- When writing, the system checks if the data has changed since it was read.

- If no conflict, the write proceeds.

- If conflict detected, the system throws an exception or prompts a retry.

Let’s look at a simple example:

Sample inventory Table

| item_id | item_nm | stock |

|---------|---------|-------|

| 1 | Apple | 10 |

| 2 | Orange | 20 |

| 3 | Banana | 30 |

Imagine two users, UserA and UserB, trying to update the apple stock simultaneously.

User A’s update:

UPDATE inventory SET stock = stock + 5 WHERE item_id = 1;

User B’s update:

UPDATE inventory SET stock = stock - 3 WHERE item_id = 1;

- Both updates execute concurrently without locking the table.

- After both operations, system checks for version conflicts.

- If there’s no conflict, the changes are merged.

New price of Apple stock = 10 + 5 - 3 = 12

- If there was a conflicting update (e.g., both changed the same field from different base versions), one update would fail, and the user must retry the transaction.

Optimistic Concurrency Is Ideal When

| Condition | Explanation |

|---|---|

| Low write contention | Most updates happen on different parts of data |

| Read-heavy, write-light systems | Updates are infrequent or less overlapping |

| High performance is critical | Avoiding locks reduces wait times |

| Distributed systems | Locking is expensive and hard to coordinate |

[Avg. reading time: 6 minutes]

Eventual consistency

Eventual consistency is a consistency model used in distributed systems (like NoSQL databases and distributed storage) where updates to data may not be immediately visible across all nodes. However, the system guarantees that all replicas will eventually converge to the same state — given no new updates are made.

Unlike stronger models like serializability or linearizability, eventual consistency prioritizes performance and availability, especially in the face of network latency or partitioning.

Simple Example: Distributed Key-Value Store

Imagine a distributed database with three nodes: Node A, Node B, and Node C. All store the value for a key called "item_stock":

Node A: item_stock = 10

Node B: item_stock = 10

Node C: item_stock = 10

Now, a user sends an update to change item_stock to 15, and it reaches only Node A initially:

Node A: item_stock = 15

Node B: item_stock = 10

Node C: item_stock = 10

At this point, the system is temporarily inconsistent. Over time, the update propagates:

Node A: item_stock = 15

Node B: item_stock = 15

Node C: item_stock = 10

Eventually, all nodes reach the same value:

Node A: item_stock = 15

Node B: item_stock = 15

Node C: item_stock = 15

Key Characteristics

- Temporary inconsistencies are allowed

- Data will converge across replicas over time

- Reads may return stale data during convergence

- Prioritizes availability and partition tolerance over strict consistency

When to Use Eventual Consistency

Eventual consistency is ideal when:

| Situation | Why It Helps |

|---|---|

| High-throughput, low-latency systems | Avoids the overhead of strict consistency |

| Geo-distributed deployments | Tolerates network delays and partitions |

| Systems with frequent writes | Enables faster response without locking or blocking |

| Availability is more critical than accuracy | Keeps services running even during network issues |

[Avg. reading time: 6 minutes]

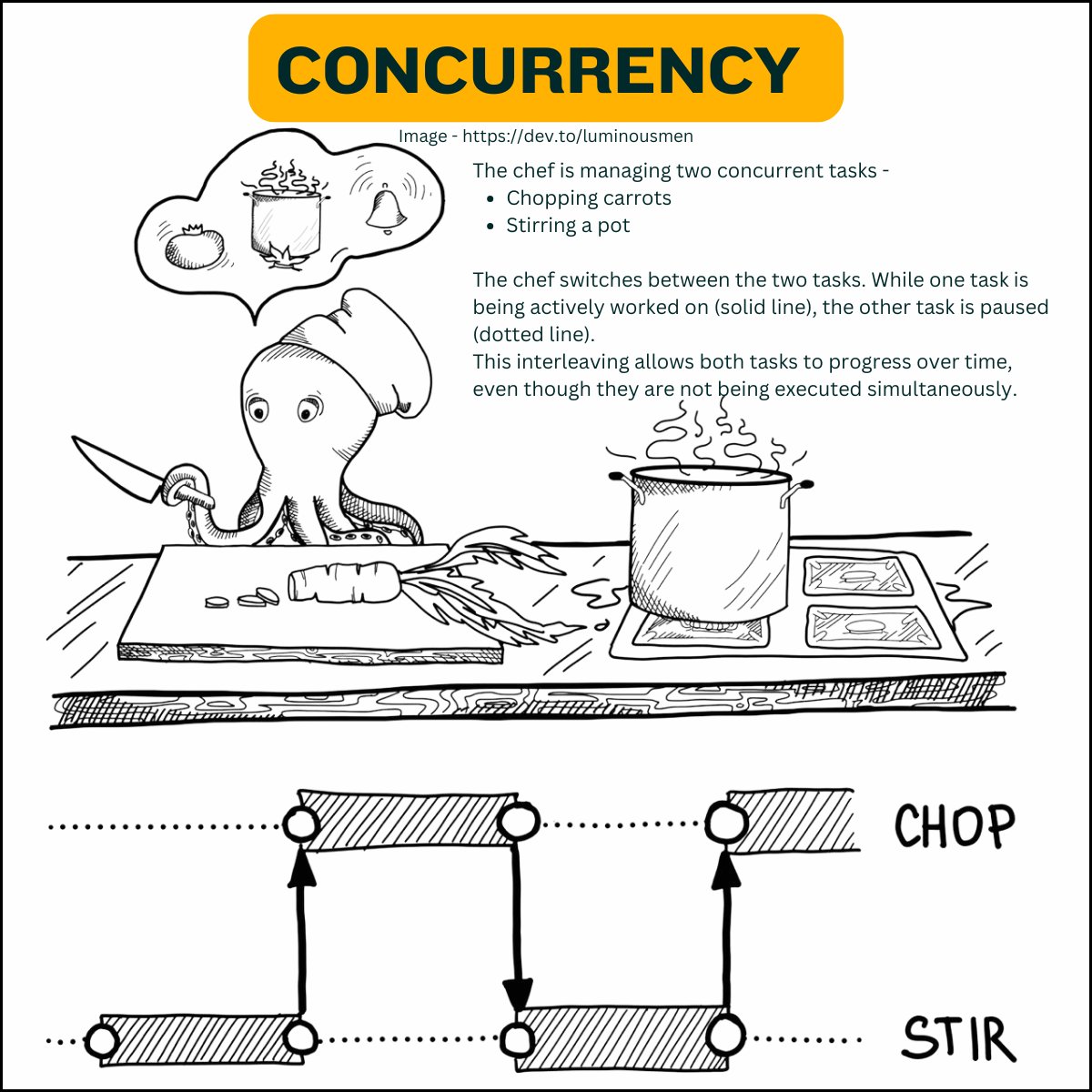

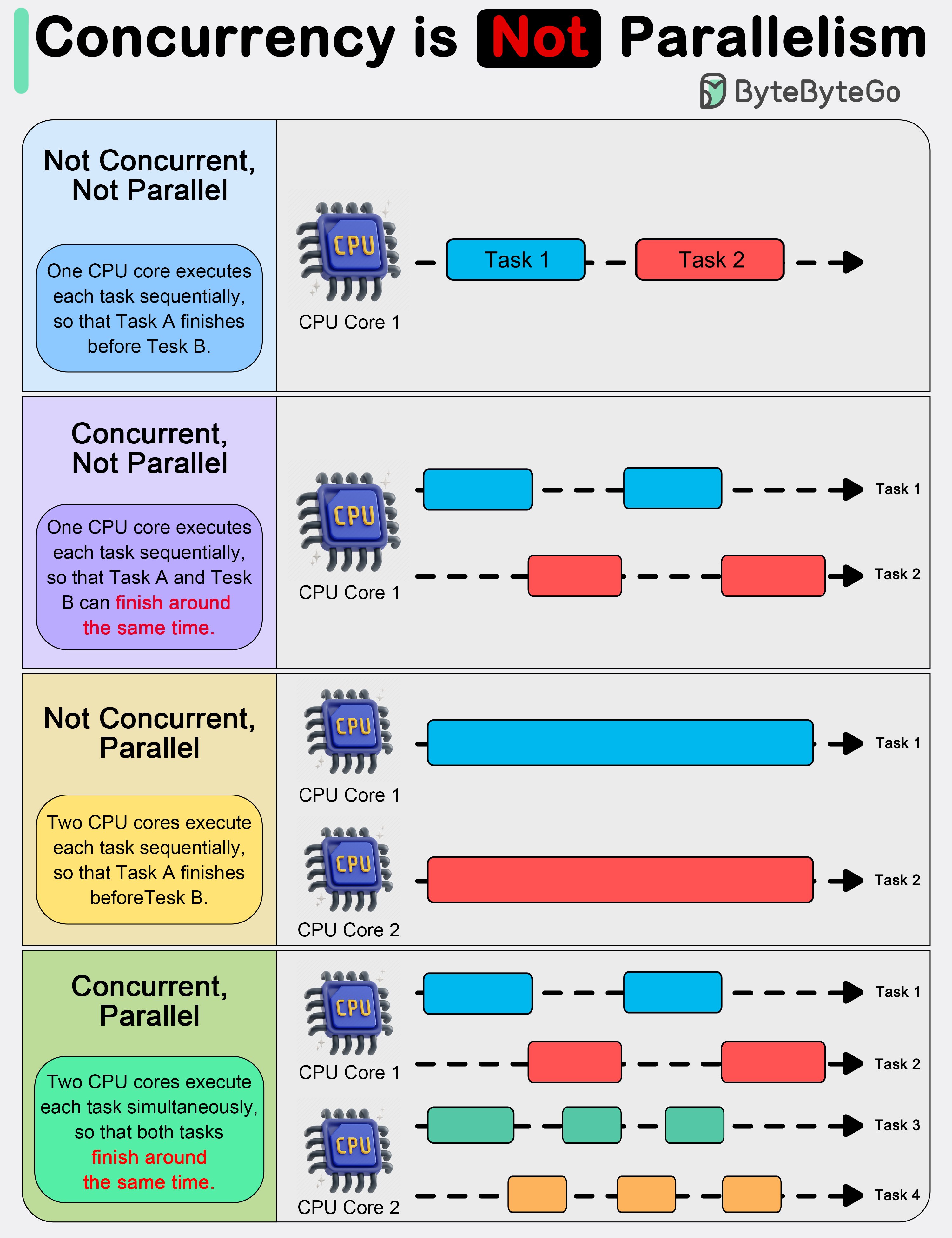

Concurrent vs. Parallel

Understanding the difference between concurrent and parallel programming is key when designing efficient, scalable applications — especially in distributed and multi-core systems.

Concurrent Programming

Concurrent programming is about managing multiple tasks at once, allowing them to make progress without necessarily executing at the same time.

- Tasks overlap in time.

- Focuses on task coordination, not simultaneous execution.

- Often used in systems that need to handle many events or users, like web servers or GUIs.

Key Traits

- Enables responsive programs (non-blocking)

- Utilizes a single core or limited resources efficiently

- Requires mechanisms like threads, coroutines, or async/await

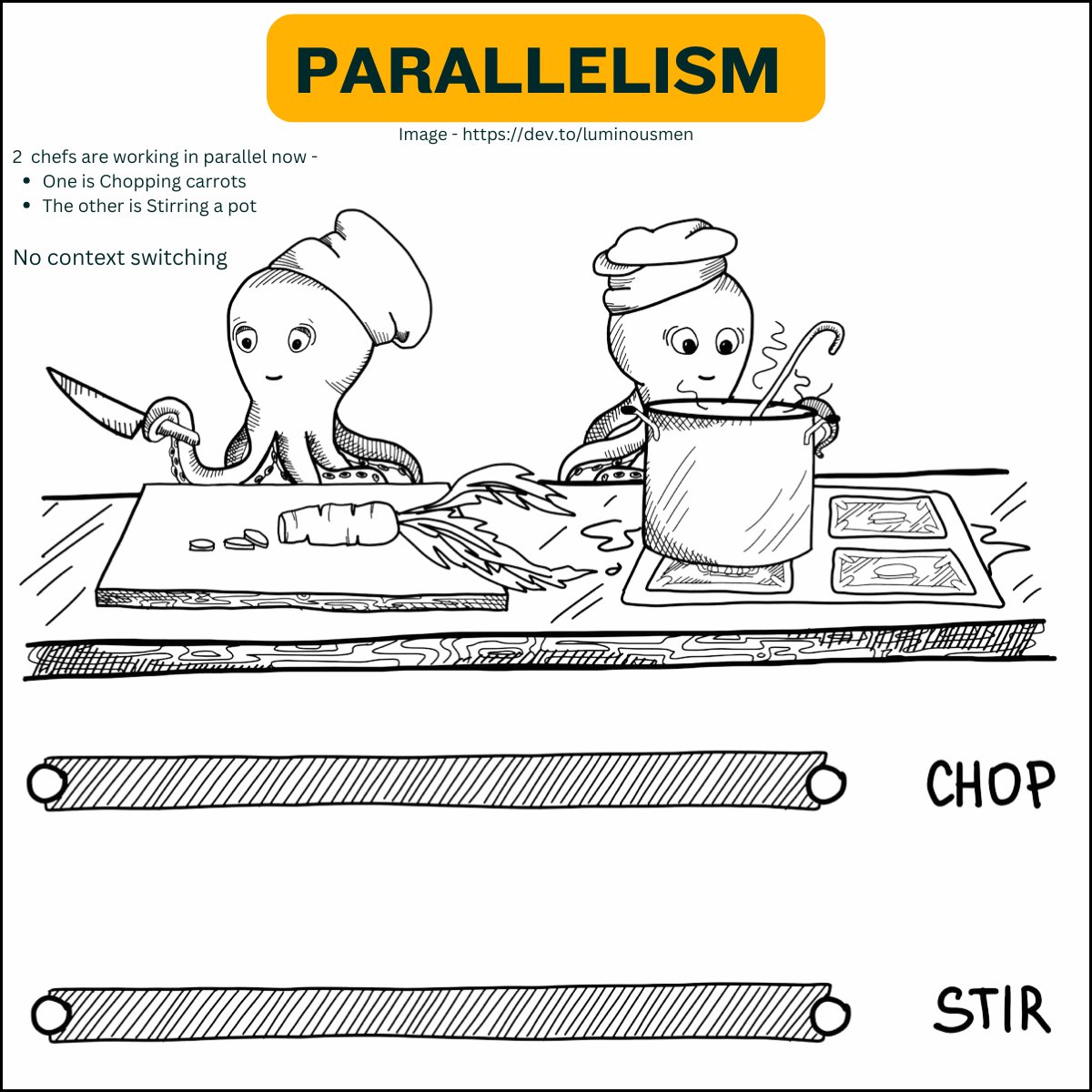

Parallel Programming

Parallel programming is about executing multiple tasks simultaneously, typically to speed up computation.

- Tasks run at the same time, often on multiple cores.

- Focuses on performance and efficiency.

- Common in high-performance computing, such as scientific simulations or data processing.

Key Traits

- Requires multi-core CPUs or GPUs

- Ideal for data-heavy workloads

- Uses multithreading, multiprocessing, or vectorization

Analogy: Cooking in a Kitchen

Concurrent Programming

One chef is working on multiple dishes. While a pot is simmering, the chef chops vegetables for the next dish. Tasks overlap, but only one is actively running at a time.

Parallel Programming

A team of chefs in a large kitchen, each cooking a different dish at the same time. Multiple dishes are actively being cooked simultaneously, speeding up the overall process.

Summary Table

| Feature | Concurrent Programming | Parallel Programming |

|---|---|---|

| Task Timing | Tasks overlap, but not necessarily at once | Tasks run simultaneously |

| Focus | Managing multiple tasks efficiently | Improving performance through parallelism |

| Execution Context | Often single-core or logical thread | Multi-core, multi-threaded or GPU-based |

| Tools/Mechanisms | Threads, coroutines, async I/O | Threads, multiprocessing, SIMD, OpenMP |

| Example Use Case | Web servers, I/O-bound systems | Scientific computing, big data, simulations |

#concurrent #parallelprogramming

[Avg. reading time: 3 minutes]

General-Purpose Language (GPL)

What is a GPL?

A GPL is a programming language designed to write software in multiple problem domains. It is not limited to a particular application area.

Swiss Army Knife

Examples

- Python – widely used in ML, web, scripting, automation.

- Java – enterprise applications, Android, backend.

- C++ – system programming, game engines.

- Rust – performance + memory safety.

- JavaScript – web front-end & server-side with Node.js.

Use Cases

- Building web apps (backend/frontend).

- Developing AI/ML pipelines.

- Writing system software and operating systems.

- Implementing data processing frameworks (e.g., Apache Spark in Scala).

- Creating mobile and desktop applications.

Why Use GPL?

- Flexibility to work across domains.

- Rich standard libraries and ecosystems.

- Ability to combine different kinds of tasks (e.g., networking + ML).

[Avg. reading time: 4 minutes]

DSL

A DSL is a programming or specification language dedicated to a particular problem domain, a particular problem representation technique, and/or a particular solution technique.

Examples

- SQL – querying and manipulating relational databases.

- HTML – for structuring content on the web.

- R – statistical computing and graphics.

- Makefiles – for building projects.

- Regular Expressions – for pattern matching.

- Markdown (READ.md or https://stackedit.io/app#)

- Mermaid - Mermaid (https://mermaid.live/)

Use Cases

- Building data pipelines (e.g., dbt, Airflow DAGs).

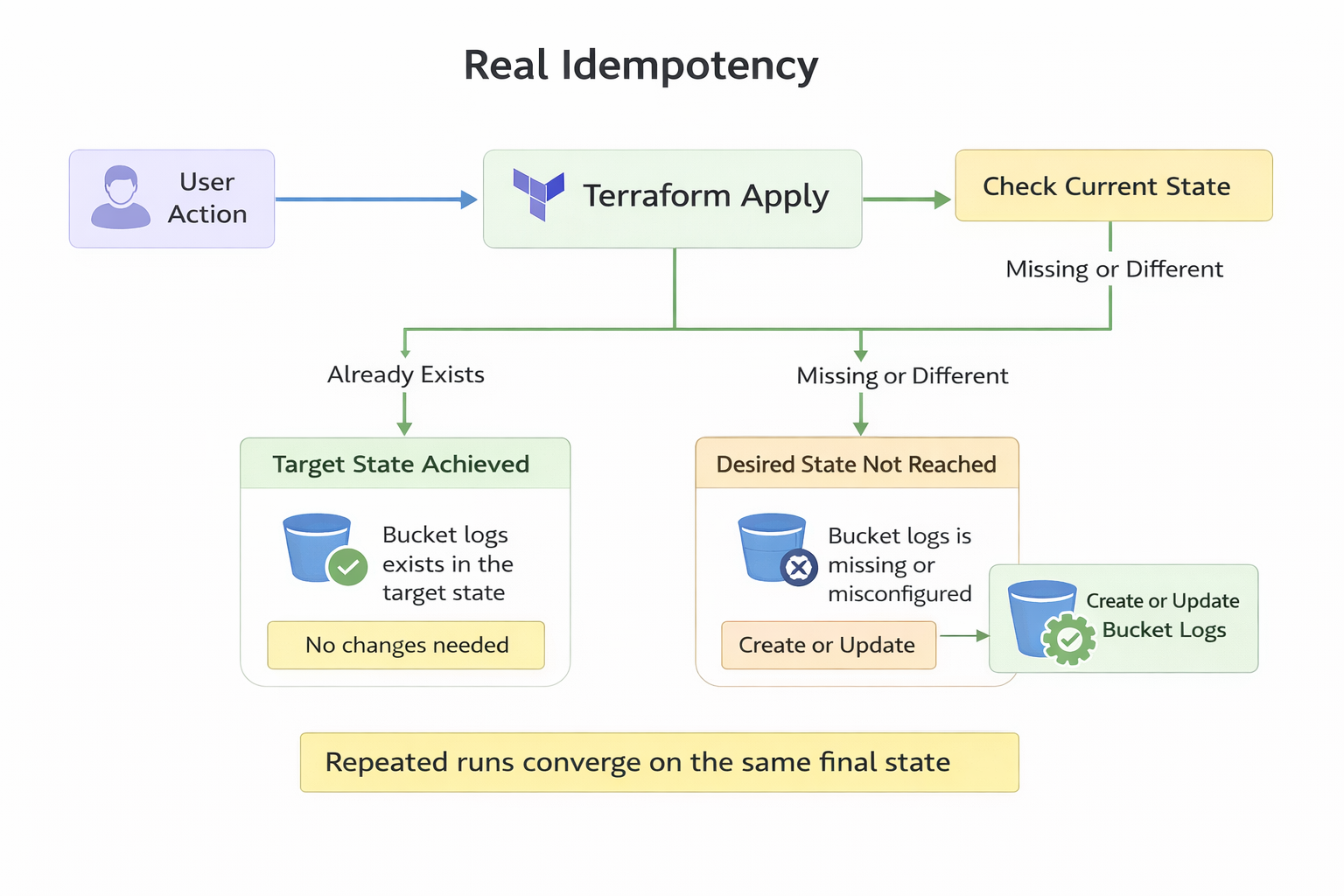

- Writing infrastructure-as-code (e.g., Terraform HCL).

- Designing UI layout (e.g., QML for Qt UI design).

- IoT rule engines (e.g., IFTTT or Node-RED flows).

- Statistical models using R.

Why Use DSL?

- Shorter, more expressive code in the domain.

- Higher-level abstractions.

- Reduced risk of bugs for domain experts.

Optional Challenge: Build Your Own DSL!

Design your own mini Domain-Specific Language (DSL)! You can keep it simple.

- Start with a specific problem.

- Create your own syntax that feels natural to all.

- Try few examples and ask your friends to try.

- Try implementing a parser using your favourite GPL.

[Avg. reading time: 4 minutes]

Popular Big Data Tools & Platforms

Big Data ecosystems rely on a wide range of tools and platforms for data processing, real-time analytics, streaming, and cloud-scale storage. Here’s a list of some widely used tools categorized by functionality:

Distributed Processing Engines

- Apache Spark – Unified analytics engine for large-scale data processing; supports batch, streaming, and ML.

- Apache Flink – Framework for stateful computations over data streams with real-time capabilities.

Real-Time Data Streaming

- Apache Kafka – Distributed event streaming platform for building real-time data pipelines and streaming apps.

Log & Monitoring Stack

- ELK Stack (Elasticsearch, Logstash, Kibana) – Searchable logging and visualization suite for real-time analytics.

Cloud-Based Platforms

- AWS (Amazon Web Services) – Scalable cloud platform offering Big Data tools like EMR, Redshift, Kinesis, and S3.

- Azure – Microsoft’s cloud platform with tools like Azure Synapse, Data Lake, and Event Hubs.

- GCP (Google Cloud Platform) – Offers BigQuery, Dataflow, Pub/Sub for large-scale data analytics.

- Databricks – Unified data platform built around Apache Spark with powerful collaboration and ML features.

- Snowflake – Cloud-native data warehouse known for performance, elasticity, and simplicity.

#bigdata #tools #cloud #kafka #spark

[Avg. reading time: 3 minutes]

NoSQL Database Types

NoSQL databases are optimized for flexibility, scalability, and performance, making them ideal for Big Data and real-time applications. They are categorized based on how they store and access data:

Key-Value Stores

Store data as simple key-value pairs. Ideal for caching, session storage, and high-speed lookups.

- Redis

- Amazon DynamoDB

Columnar Stores

Store data in columns rather than rows, optimized for analytical queries and large-scale batch processing.

- Apache HBase

- Apache Cassandra

- Amazon Redshift

Document Stores

Store semi-structured data like JSON or BSON documents. Great for flexible schemas and content management systems.

- MongoDB

- Amazon DocumentDB

Graph Databases

Use nodes and edges to represent and traverse relationships between data. Ideal for social networks, recommendation engines, and fraud detection.

- Neo4j

- Amazon Neptune

Tip: Choose the NoSQL database type based on your data access patterns and application needs.

Not all NoSQL databases solve the same problem.

#nosql #keyvalue #documentdb #graphdb #columnar

[Avg. reading time: 4 minutes]

Learning Big Data

Learning Big Data goes beyond just handling large datasets. It involves building a foundational understanding of data types, file formats, processing tools, and cloud platforms used to store, transform, and analyze data at scale.

Types of Files & Formats

- Data File Types: CSV, JSON

- File Formats: CSV, TSV, TXT, Parquet

Linux & File Management Skills

- Essential Linux Commands:

ls,cat,grep,awk,sort,cut,sed, etc. - Useful Libraries & Tools:

awk,jq,csvkit,grep– for filtering, transforming, and managing structured data

Data Manipulation Foundations

- Regular Expressions: For pattern matching and advanced string operations

- SQL / RDBMS: Understanding relational data and query languages

- NoSQL Databases: Working with document, key-value, columnar, and graph stores

Cloud Technologies

- Introduction to major platforms: AWS, Azure, GCP

- Services for data storage, compute, and analytics (e.g., S3, EMR, BigQuery)

Big Data Tools & Frameworks

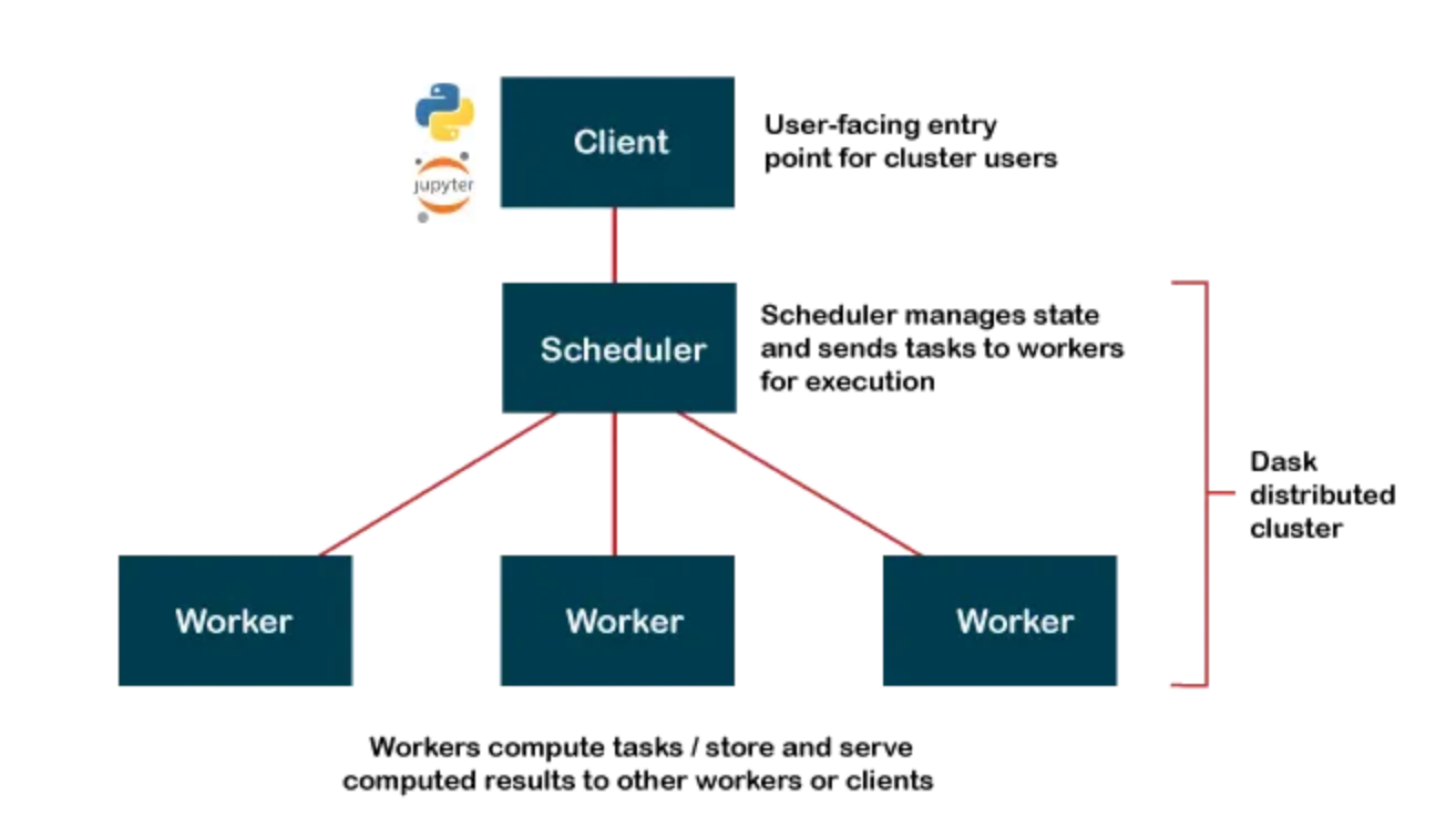

- Tools like Apache Spark, Flink, Kafka, Dask

- Workflow orchestration (e.g., Airflow, DBT, Databricks Workflows)

Miscellaneous Tools & Libraries

- Visualization:

matplotlib,seaborn,Plotly - Data Engineering:

pandas,pyarrow,sqlalchemy - Streaming & Real-time:

Kafka,Spark Streaming,Flume

Tip: Big Data learning is a multi-disciplinary journey. Start small — explore files and formats — then gradually move into tools, pipelines, cloud platforms, and real-time systems.

[Avg. reading time: 1 minute]

Developer Tools

[Avg. reading time: 5 minutes]

Introduction

Before diving into Data or ML frameworks, it's important to have a clean and reproducible development setup. A good environment makes you:

- Faster: less time fighting dependencies.

- Consistent: same results across laptops, servers, and teammates.

- Confident: tools catch errors before they become bugs.

A consistent developer experience saves hours of debugging. You spend more time solving problems, less time fixing environments.

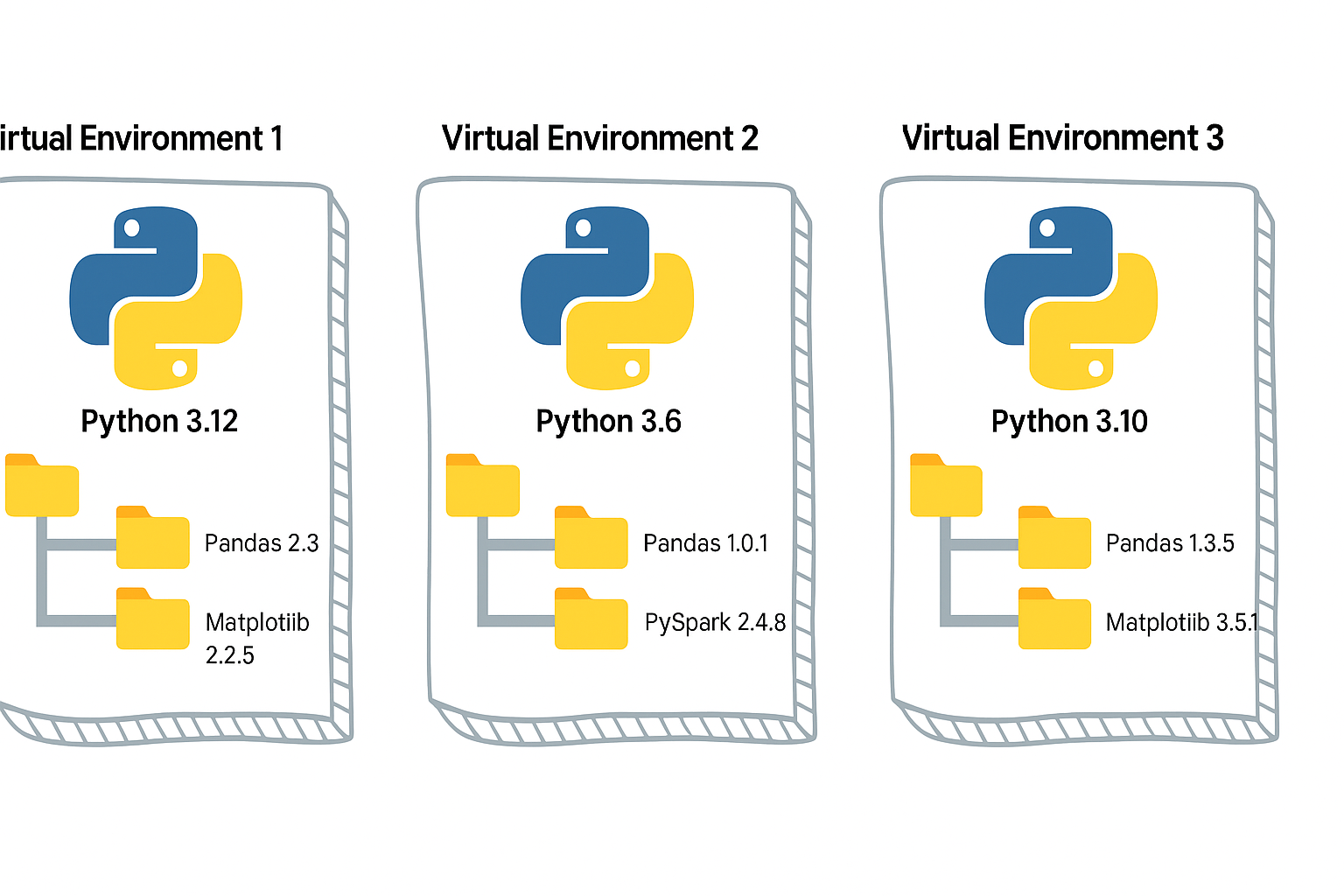

Python Virtual Environment

- A virtual environment is like a sandbox for Python.

- It isolates your project’s dependencies from the global Python installation.

- Easy to manage different versions of library.

- Must depend on requirements.txt, it has to be managed manually.

Without it, installing one package for one project may break another project.

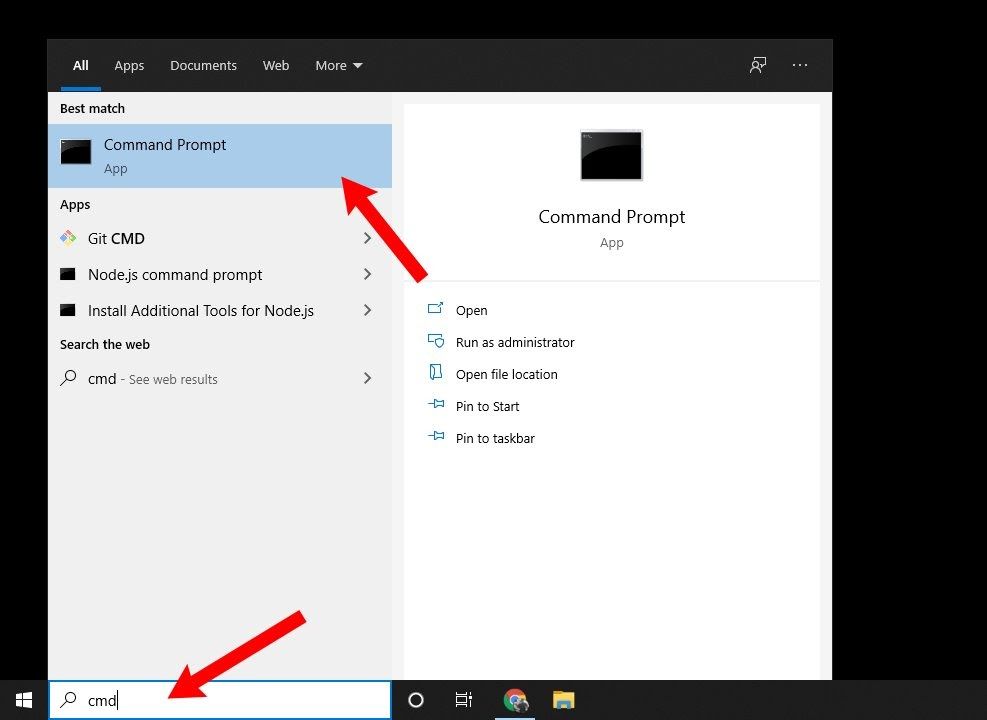

Open the CMD prompt (Windows)

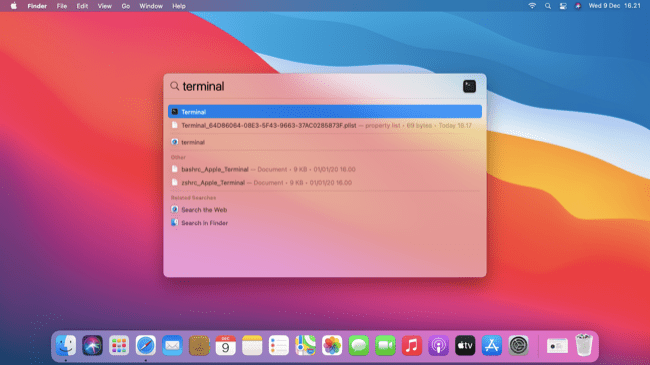

Open the Terminal (Mac)

# Step 0: Create a project folder under your Home folder.

mkdir project

cd project

# Step 1: Create a virtual environment

python -m venv myenv

# Step 2: Activate it

# On Mac/Linux:

source myenv/bin/activate

# On Windows:

myenv\Scripts\activate.bat

# Step 3: Install packages (they go inside `myenv`, not global)

pip install faker

# Step 4: Open Python

python

# Step 5: Verify

import sys

sys.prefix

sys.base_prefix

# Step 6: Run this sample

from faker import Faker

fake = Faker()

fake.name()

# Step 6: Close Python (Control + Z)

# Step 7: Deactivate the venv when done

deactivate

As a next step, you can either use Poetry or UV as your package manager.

#venv #python #uv #poetry developer_tools

[Avg. reading time: 3 minutes]

UV

Dependency & Environment Manager

- Written in Rust.

- Syntax is lightweight.

- Automatic Virtual environment creation.

Create a new project:

# Initialize a new uv project

uv init uv_helloworld

Sample layout of the directory structure

.

├── main.py

├── pyproject.toml

├── README.md

└── uv.lock

# Change directory

cd uv_helloworld

# # Create a virtual environment myproject

# uv venv myproject

# or create a UV project with specific version of Python

# uv venv myproject --python 3.11

# # Activate the Virtual environment

# source myproject/bin/activate

# # Verify the Virtual Python version

# which python3

# add library (best practice)

uv add faker

# verify the list of libraries under virtual env

uv tree

# To find the list of libraries inside Virtual env

uv pip list

edit the main.py

from faker import Faker

fake = Faker()

print(fake.name())

uv run main.py

Read More on the differences between UV and Poetry

[Avg. reading time: 12 minutes]

Python Developer Tools

PEP

PEP, or Python Enhancement Proposal, is the official style guide for Python code. It provides conventions and recommendations for writing readable, consistent, and maintainable Python code.

- PEP 8 : Style guide for Python code (most famous).

- PEP 20 : "The Zen of Python" (guiding principles).

- PEP 484 : Type hints (basis for MyPy).

- PEP 517/518 : Build system interfaces (basis for pyproject.toml, used by Poetry/UV).

- PEP 572 : Assignment expressions (the := walrus operator).

- PEP 695 : Type parameter syntax for generics (Python 3.12).

Key Aspects of PEP 8 (Popular ones)

Indentation

- Use 4 spaces per indentation level

- Continuation lines should align with opening delimiter or be indented by 4 spaces.

Line Length

- Limit lines to a maximum of 79 characters.

- For docstrings and comments, limit lines to 72 characters.

Blank Lines

- Use 2 blank lines before top-level functions and class definitions.

- Use 1 blank line between methods inside a class.

Imports

- Imports should be on separate lines.

- Group imports into three sections: standard library, third-party libraries, and local application imports.

- Use absolute imports whenever possible.

# Correct

import os

import sys

# Wrong

import sys, os

Naming Conventions

- Use

snake_casefor function and variable names. - Use

CamelCasefor class names. - Use

UPPER_SNAKE_CASEfor constants. - Avoid single-character variable names except for counters or indices.

Whitespace

- Don’t pad inside parentheses/brackets/braces.

- Use one space around operators and after commas, but not before commas.

- No extra spaces when aligning assignments.

Comments

- Write comments that are clear, concise, and helpful.

- Use complete sentences and capitalize the first word.

- Use # for inline comments, but avoid them where the code is self-explanatory.

Docstrings

- Use triple quotes (""") for multiline docstrings.

- Describe the purpose, arguments, and return values of functions and methods.

Code Layout

- Keep function definitions and calls readable.

- Avoid writing too many nested blocks.

Consistency

- Consistency within a project outweighs strict adherence.

- If you must diverge, be internally consistent.

Linting

Linting is the process of automatically checking your Python code for:

-

Syntax errors

-

Stylistic issues (PEP 8 violations)

-

Potential bugs or bad practices

-

Keeps your code consistent and readable.

-

Helps catch errors early before runtime.

-

Encourages team-wide coding standards.

# Incorrect

import sys, os

# Correct

import os

import sys

# Bad spacing

x= 5+3

# Good spacing

x = 5 + 3

Ruff : Linter and Code Formatter

Ruff is a fast, modern tool written in Rust that helps keep your Python code:

- Consistent (follows PEP 8)

- Clean (removes unused imports, fixes spacing, etc.)

- Correct (catches potential errors)

Install

poetry add ruff

uv add ruff

Verify

ruff --version

ruff --help

example.py

import os, sys

def greet(name):

print(f"Hello, {name}")

def message(name): print(f"Hi, {name}")

def calc_sum(a, b): return a+b

greet('World')

greet('Ruff')

message('Ruff')

poetry run ruff check example.py

poetry run ruff check example.py --fix

poetry run ruff format example.py --check

poetry run ruff format example.py

OR

uv run ruff check example.py

uv run ruff check example.py --fix

uv run ruff format example.py --check

uv run ruff check example.py

MyPy : Type Checking Tool

mypy is a static type checker for Python. It checks your code against the type hints you provide, ensuring that the types are consistent throughout the codebase.

It primarily focuses on type correctness—verifying that variables, function arguments, return types, and expressions match the expected types.

Install

poetry add mypy

or

uv add mypy

or

pip install mypy

sample.py

x = 1

x = 1.0

x = True

x = "test"

x = b"test"

print(x)

def add(a: int, b: int) -> int:

return a + b

print(add(100, 123))

print(add("hello", "world"))

uv run mypy sample.py

or

poetry run mypy sample.py

or

mypy sample.py

[Avg. reading time: 11 minutes]

DUCK DB

DuckDB is a single file built with no dependencies.

All the great features can be read here https://duckdb.org/

Automatic Parallelism: DuckDB has improved its automatic parallelism capabilities, meaning it can more effectively utilize multiple CPU cores without requiring manual tuning. This results in faster query execution for large datasets.

Parquet File Improvements: DuckDB has improved its handling of Parquet files, both in terms of reading speed and support for more complex data types and compression codecs. This makes DuckDB an even better choice for working with large datasets stored in Parquet format.

Query Caching: Improves the performance of repeated queries by caching the results of previous executions. This can be a game-changer for analytics workloads with similar queries being run multiple times.

How to use DuckDB?

Download the CLI Client

-

Linux).

-

For other programming languages, visit https://duckdb.org/docs/installation/

-

Unzip the file.

-

Open Command / Terminal and run the Executable.

DuckDB in Data Engineering

Download orders.parquet from

https://github.com/duckdb/duckdb-data/releases/download/v1.0/orders.parquet

More files are available here

https://github.com/cwida/duckdb-data/releases/

Open Command Prompt or Terminal

./duckdb

# Create / Open a database

.open ordersdb

Duckdb allows you to read the contents of orders.parquet as is without needing a table. Double quotes around the file name orders.parquet is essential.

describe table "orders.parquet"

Not only this, but it also allows you to query the file as-is. (This feature is similar to one data bricks supports)

select * from "orders.parquet" limit 3;

DuckDB supports CTAS syntax and helps to create tables from the actual file.

show tables;

create table orders as select * from "orders.parquet";

select count(*) from orders;

DuckDB supports parallel query processing, and queries run fast.

This table has 1.5 million rows, and aggregation happens in less than a second.

select now(); select o_orderpriority,count(*) cnt from orders group by o_orderpriority; select now();

DuckDB also helps to convert parquet files to CSV in a snap. It also supports converting CSV to Parquet.

COPY "orders.parquet" to 'orders.csv' (FORMAT "CSV", HEADER 1);Select * from "orders.csv" limit 3;

It also supports exporting existing Tables to Parquet files.

COPY "orders" to 'neworder.parquet' (FORMAT "PARQUET");

DuckDB supports Programming languages such as Python, R, JAVA, node.js, C/C++.

DuckDB ably supports Higher-level SQL programming such as Macros, Sequences, Window Functions.

Get sample data from Yellow Cab

https://www.nyc.gov/site/tlc/about/tlc-trip-record-data.page

Copy yellow cabs data into yellowcabs folder

create table taxi_trips as select * from "yellowcabs/*.parquet";

SELECT

PULocationID,

EXTRACT(HOUR FROM tpep_pickup_datetime) AS hour_of_day,

AVG(fare_amount) AS avg_fare

FROM

taxi_trips

GROUP BY

PULocationID,

hour_of_day;

Extensions

https://duckdb.org/docs/extensions/overview

INSTALL json;

LOAD json;

select * from demo.json;

describe demo.json;

Load directly from HTTP location

select * from 'https://raw.githubusercontent.com/gchandra10/filestorage/main/sales_100.csv'

#duckdb #singlefiledatabase #parquet #tools #cli

[Avg. reading time: 8 minutes]

JQ

- jq is a lightweight and flexible command-line JSON processor.

- Reads JSON from stdin or a file, applies filters, and writes JSON to stdout.

- Useful when working with APIs, logs, or config files in JSON format.

- Handy tool in Automation.

- Download JQ CLI (Preferred) and learn JQ.

- Use the VSCode Extension and learn JQ.

Download the sample JSON

https://raw.githubusercontent.com/gchandra10/jqtutorial/refs/heads/master/sample_nows.json

Note: As this has no root element, '.' is used.

1. View JSON file in readable format

jq '.' sample_nows.json

2. Read the First JSON element / object

jq 'first(.[])' sample_nows.json

3. Read the Last JSON element

jq 'last(.[])' sample_nows.json

4. Read top 3 JSON elements

jq 'limit(3;.[])' sample_nows.json

5. Read 2nd & 3rd element. Remember, Python has the same format. LEFT Side inclusive, RIGHT Side exclusive

jq '.[2:4]' sample_nows.json

6. Extract individual values. | Pipeline the output

jq '.[] | [.balance,.age]' sample_nows.json

7. Extract individual values and do some calculations

jq '.[] | [.age, 65 - .age]' sample_nows.json

8. Return CSV from JSON

jq '.[] | [.company, .phone, .address] | @csv ' sample_nows.json

9. Return Tab Separated Values (TSV) from JSON

jq '.[] | [.company, .phone, .address] | @tsv ' sample_nows.json

10. Return with custom pipeline delimiter ( | )

jq '.[] | [.company, .phone, .address] | join("|")' sample_nows.json

Pro TIP : Export this result > output.txt and Import to db using bulk import tools like bcp, load data infile

11. Convert the number to string and return | delimited result

jq '.[] | [.balance,(.age | tostring)] | join("|") ' sample_nows.json

12. Process Array return Name (returns as list / array)

jq '.[] | [.friends[].name]' sample_nows.json

or (returns line by line)

jq '[].friends[].name' sample_nows.json

13. Parse multi level values

returns as list / array

jq '.[] | [.name.first, .name.last]' sample_nows.json

returns line by line

jq '.[].name.first, .[].name.last' sample_nows.json

14. Query values based on condition, say .index > 2

jq 'map(select(.index > 2))' sample_nows.json

jq 'map(select(.index > 2)) | .[] | [.index,.balance,.age]' sample_nows.json

15. Sorting Elements

# Sort by Age ASC

jq 'sort_by(.age)' sample_nows.json

# Sort by Age DESC

jq 'sort_by(-.age)' sample_nows.json

# Sort on multiple keys

jq 'sort_by(.age, .index)' sample_nows.json

Use Cases

curl -s https://www.githubstatus.com/api/v2/status.json

curl -s https://www.githubstatus.com/api/v2/status.json | jq '.'

curl -s https://www.githubstatus.com/api/v2/status.json | jq '.status'

#jq #tools #json #parser #cli #automation

[Avg. reading time: 0 minutes]

Dataformat

[Avg. reading time: 3 minutes]

Introduction to Data Formats

What are Data Formats?

- Data formats define how data is structured, stored, and exchanged between systems.

- In Big Data, the choice of data format is crucial because it affects:

- Storage efficiency

- Processing speed

- Interoperability

- Compression

Why are Data Formats Important in Big Data?

- Big Data often involves massive volumes of data from diverse sources.

- Choosing the right format ensures:

- Efficient data storage

- Faster querying and processing

- Easier integration with analytics frameworks like Spark, Flink, etc.

Data Formats vs. Traditional Database Storage

| Feature | Traditional RDBMS | Big Data Formats |

|---|---|---|

| Storage | Tables with rows and columns | Files/Streams with structured data |

| Schema | Fixed and enforced | Flexible, sometimes schema-on-read |

| Processing | Transactional, ACID | Batch or stream, high throughput |

| Data Model | Relational | Structured, semi-structured, binary |

| Use Cases | OLTP, Reporting | ETL, Analytics, Machine Learning |

[Avg. reading time: 3 minutes]

Common Data Formats

CSV (Comma-Separated Values)

A simple text-based format where each row is a record and columns are separated by commas.

Example:

name,age,city

Rachel,30,New York

Phoebe,25,San Francisco

Use Cases:

- Data exchange between systems

- Lightweight storage

Pros:

- Human-readable

- Easy to generate and parse

Cons:

- No support for nested or complex structures

- No schema enforcement

- Inefficient for very large data

TSV (Tab-Separated Values)

Like CSV but uses tabs instead of commas.

Example:

name age city

Rachel 30 New York

Phoebe 25 San Francisco

Use Cases:

Similar to CSV but avoids issues with commas in data

Pros:

- Easy to read and parse

- Handles data with commas

Cons:

- Same as CSV: no schema, no nested data

#bigdata #dataformat #csv #parquet #arrow

[Avg. reading time: 8 minutes]

JSON

Java Script Object Notation.

- This is neither a row-based nor Columnar Format.

- The flexible way to store & share data across systems.

- It's a text file with curly braces & key-value pairs { }

Simplest JSON format

{"id": "1","name":"Rachel"}

Properties

- Language Independent.

- Self-describing and easy to understand.

Basic Rules

- Curly braces to hold the objects.

- Data is represented in Key Value or Name Value pairs.

- Data is separated by a comma.

- The use of double quotes is necessary.

- Square brackets [ ] hold an array of data.

JSON Values

String {"name":"Rachel"}

Number {"id":101}

Boolean {"result":true, "status":false} (lowercase)

Object {

"character":{"fname":"Rachel","lname":"Green"}

}

Array {

"characters":["Rachel","Ross","Joey","Chanlder"]

}

NULL {"id":null}

Sample JSON Document

{

"characters": [

{

"id" : 1,

"fName":"Rachel",

"lName":"Green",

"status":true

},

{

"id" : 2,

"fName":"Ross",

"lName":"Geller",

"status":true

},

{

"id" : 3,

"fName":"Chandler",

"lName":"Bing",

"status":true

},

{

"id" : 4,

"fName":"Phebe",

"lName":"Buffay",

"status":false

}

]

}

JSON Best Practices

No Hyphen in your Keys.

{"first-name":"Rachel","last-name":"Green"} is not right. ✘

Under Scores Okay

{"first_name":"Rachel","last_name":"Green"} is okay ✓

Lowercase Okay

{"firstname":"Rachel","lastname":"Green"} is okay ✓

Camelcase best

{"firstName":"Rachel","lastName":"Green"} is the best. ✓

Use Cases

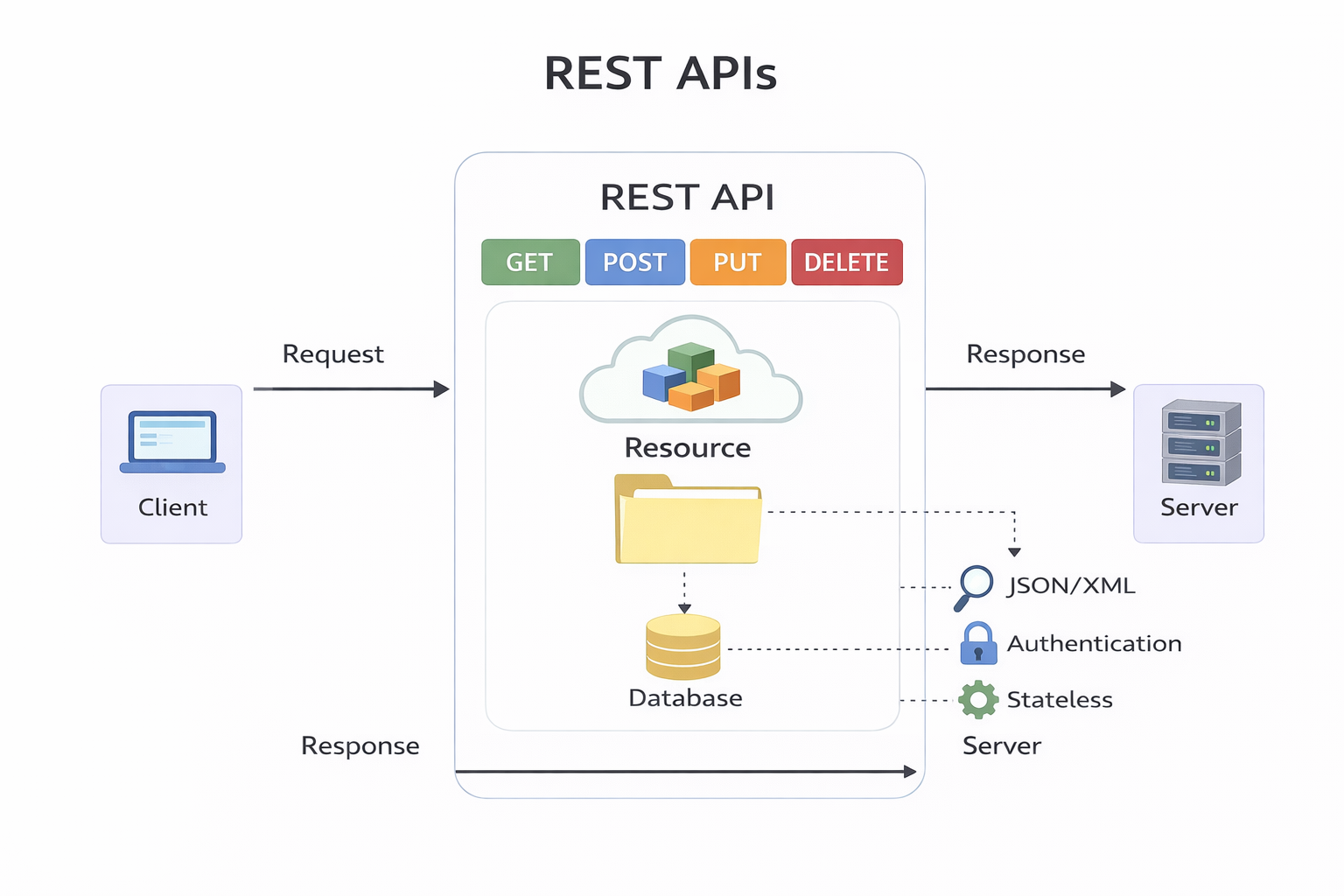

-

APIs and Web Services: JSON is widely used in RESTful APIs for sending and receiving data.

-

Configuration Files: Many modern applications and development tools use JSON for configuration.

-

Data Storage: Some NoSQL databases like MongoDB use JSON or BSON (binary JSON) for storing data.

-

Serialization and Deserialization: Converting data to/from a format that can be stored or transmitted.

Python Example

Serialize : Convert Python Object to JSON (Shareable) Format.

DeSerialize : Convert JSON (Shareable) String to Python Object.

import json

def json_serialize(file_name):

# Python dictionary with Friend's characters

friends_characters = {

"characters": [{

"name": "Rachel Green",

"job": "Fashion Executive"

}, {

"name": "Ross Geller",

"job": "Paleontologist"

}, {

"name": "Monica Geller",

"job": "Chef"

}, {

"name": "Chandler Bing",

"job": "Statistical Analysis and Data Reconfiguration"

}, {

"name": "Joey Tribbiani",

"job": "Actor"

}, {

"name": "Phoebe Buffay",

"job": "Massage Therapist"

}]

}

print(type(friends_characters), friends_characters)

print("-" * 200)

# Serializing json

json_data = json.dumps(friends_characters, indent=4)

print(type(json_data), json_data)

# Saving to a file

with open(file_name, 'w') as file:

json.dump(friends_characters, file, indent=4)

def json_deserialize(file_name):

#file_path = 'friends_characters.json'

# Open the file and read the JSON content

with open(file_name, 'r') as file:

data = json.load(file)

print(data, type(data))

def main():

file_name = 'friends_characters.json'

json_serialize(file_name)

json_deserialize(file_name)

if __name__ == "__main__":

print("Starting JSON Serialization...")

main()

print("Done!")

#bigdata #dataformat #json #hierarchical

[Avg. reading time: 20 minutes]

Parquet

Parquet is a columnar storage file format optimized for use with Apache Hadoop and related big data processing frameworks. Originally developed by Twitter and Cloudera, Parquet provides a compact and efficient way of storing large, flat datasets.

Best suited for WORM (Write Once, Read Many) workloads.

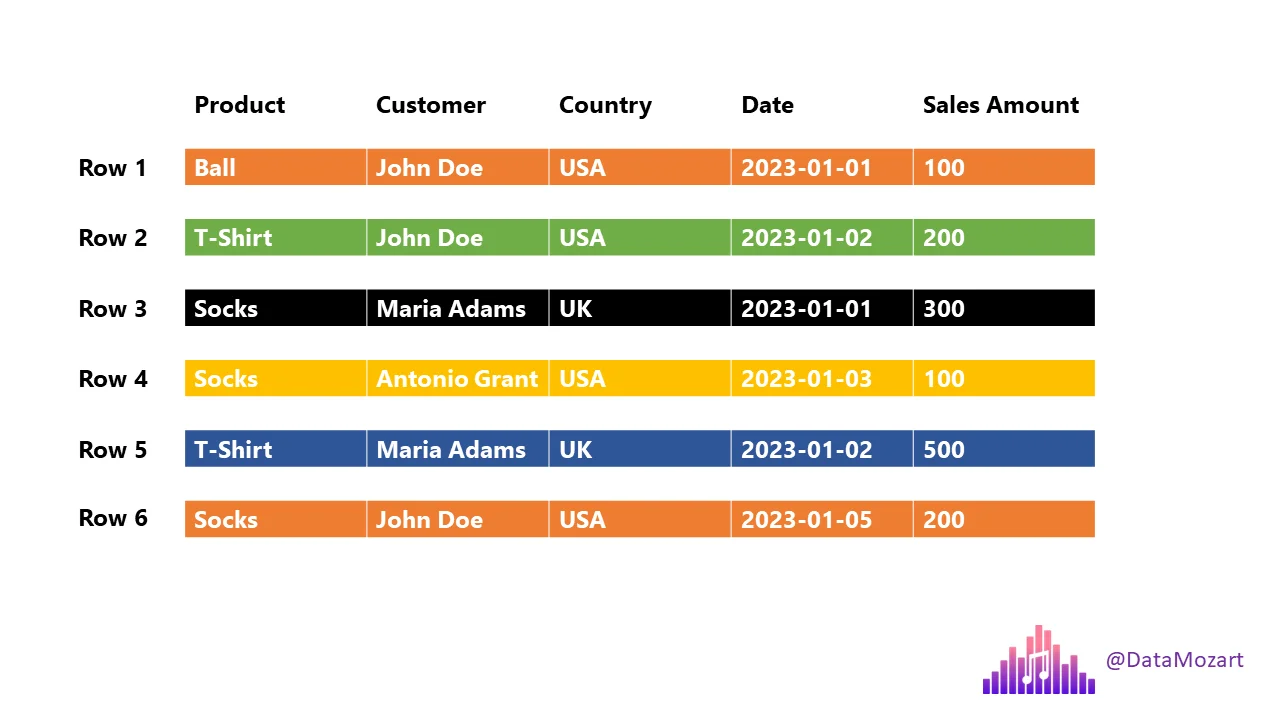

Row Storage

Give me list of Total T-Shirts sold or Customers from UK

It scans the entire dataset.

Columnar Storage

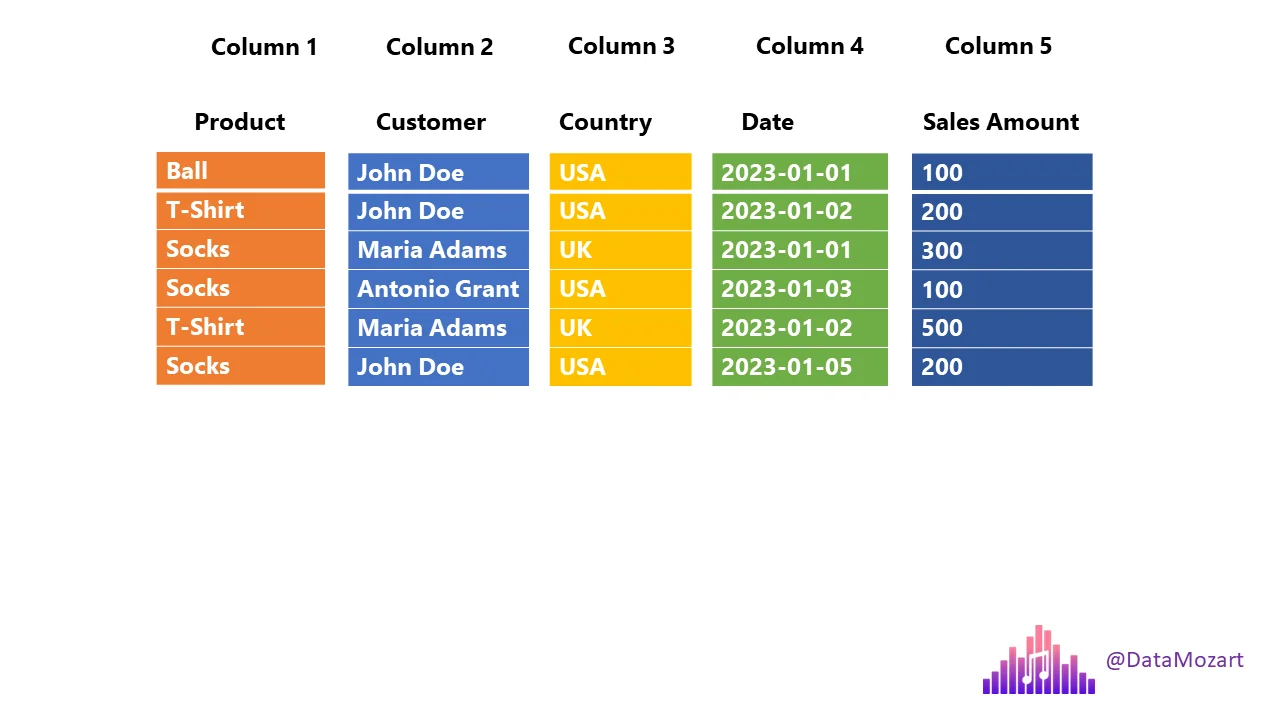

Terms to Know

Projection: Columns that are needed by the query.

select product, country, salesamount from sales;

Here the projections are: product, country & salesamount

Predicate: A filter condition that selects rows.

select product, country, salesamount from sales where country='UK';

Here predicate is where country = 'UK'

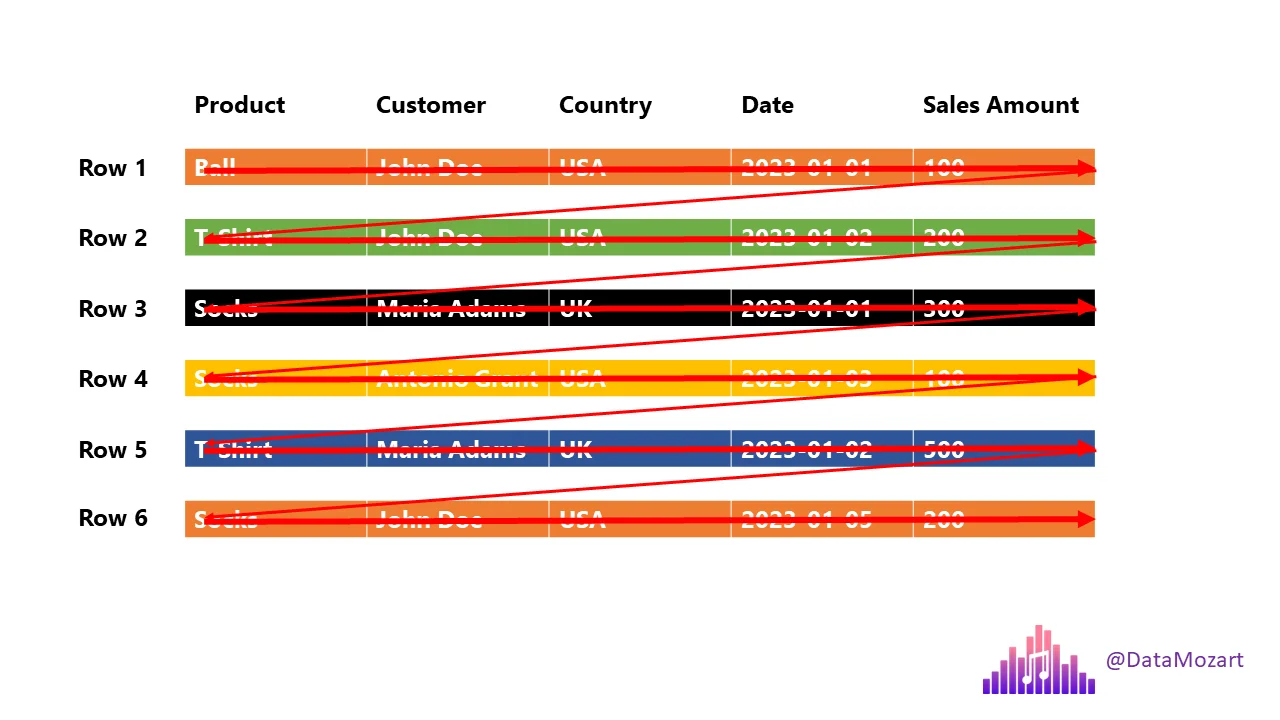

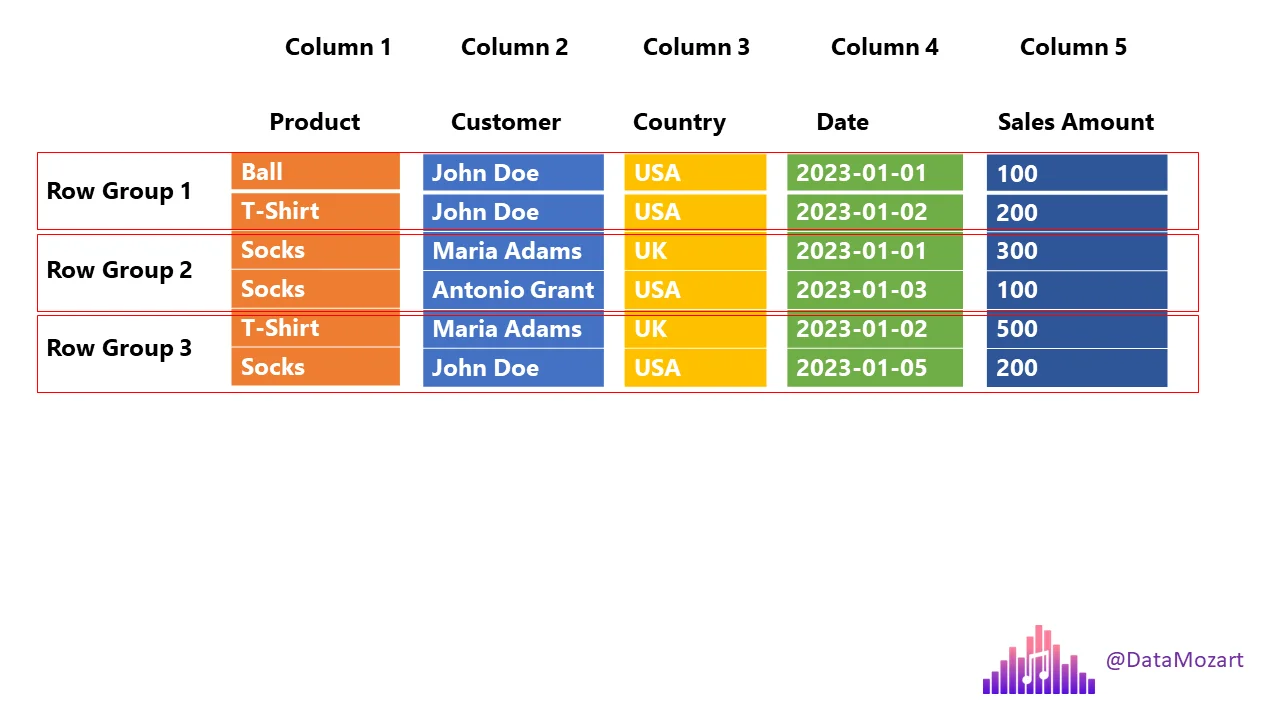

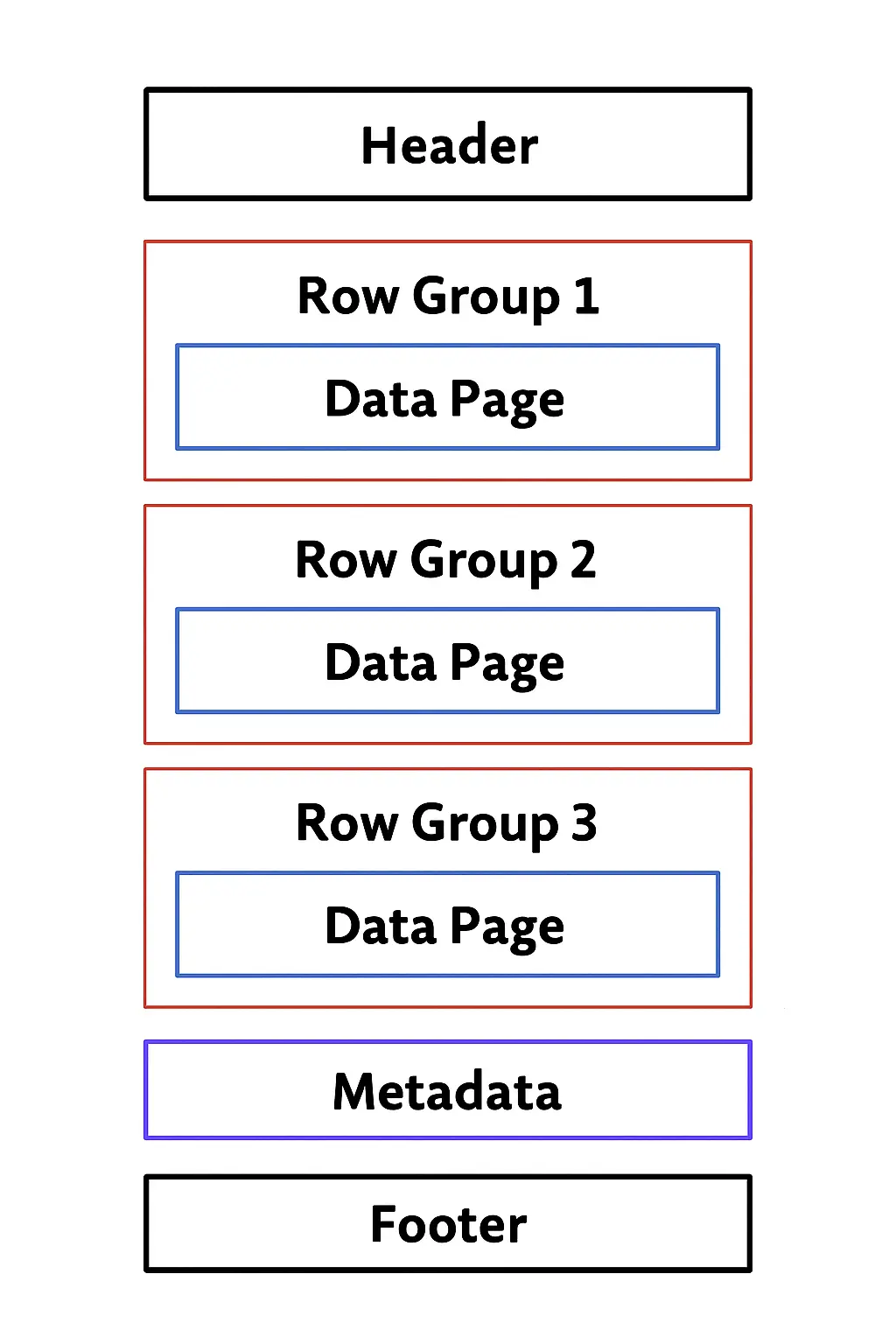

Row Groups in Parquet

-

Parquet divides data into row groups, each containing column chunks for all columns.

-

Horizontal partition—each row group can be processed independently.

-

Row groups enable parallel processing and make it possible to skip unnecessary data using metadata.

Parquet - Columnar Storage + Row Groups

Parquet File format

Parquet Fileformat Layout {{footnote: https://parquet.apache.org/docs/file-format/}}

Sample Data

| Product | Customer | Country | Date | Sales Amount |

|---|---|---|---|---|

| Ball | John Doe | USA | 2023-01-01 | 100 |

| T-Shirt | John Doe | USA | 2023-01-02 | 200 |

| Socks | Jane Doe | UK | 2023-01-03 | 150 |

| Socks | Jane Doe | UK | 2023-01-04 | 180 |

| T-Shirt | Alex | USA | 2023-01-05 | 120 |

| Socks | Alex | USA | 2023-01-06 | 220 |

Data stored inside Parquet

┌──────────────────────────────────────────────┐

│ File Header │

│ ┌────────────────────────────────────────┐ │

│ │ Magic Number: "PAR1" │ │

│ └────────────────────────────────────────┘ │

├──────────────────────────────────────────────┤

│ Row Group 1 │

│ ┌────────────────────────────────────────┐ │

│ │ Column Chunk: Product │ │

│ │ ├─ Page 1: Ball, T-Shirt, Socks │ │

│ └────────────────────────────────────────┘ │

│ ┌────────────────────────────────────────┐ │

│ │ Column Chunk: Customer │ │

│ │ ├─ Page 1: John Doe, John Doe, Jane Doe│ │

│ └────────────────────────────────────────┘ │

│ ┌────────────────────────────────────────┐ │

│ │ Column Chunk: Country │ │

│ │ ├─ Page 1: USA, USA, UK │ │

│ └────────────────────────────────────────┘ │

│ ┌────────────────────────────────────────┐ │

│ │ Column Chunk: Date │ │

│ │ ├─ Page 1: 2023-01-01, 2023-01-02, │ │

│ │ 2023-01-03 │ │

│ └────────────────────────────────────────┘ │

│ ┌────────────────────────────────────────┐ │

│ │ Column Chunk: Sales Amount │ │

│ │ ├─ Page 1: 100, 200, 150 │ │

│ └────────────────────────────────────────┘ │

│ ┌────────────────────────────────────────┐ │

│ │ Row Group Metadata │ │

│ │ ├─ Num Rows: 3 │ │

│ │ ├─ Min/Max per Column: │ │

│ │ • Product: Ball/T-Shirt/Socks │ │

│ │ • Customer: Jane Doe/John Doe │ │

│ │ • Country: UK/USA │ │

│ │ • Date: 2023-01-01 to 2023-01-03 │ │

│ │ • Sales Amount: 100 to 200 │ │

│ └────────────────────────────────────────┘ │

├──────────────────────────────────────────────┤

│ Row Group 2 │

│ ┌────────────────────────────────────────┐ │

│ │ Column Chunk: Product │ │

│ │ ├─ Page 1: Socks, T-Shirt, Socks │ │

│ └────────────────────────────────────────┘ │

│ ┌────────────────────────────────────────┐ │

│ │ Column Chunk: Customer │ │

│ │ ├─ Page 1: Jane Doe, Alex, Alex │ │

│ └────────────────────────────────────────┘ │

│ ┌────────────────────────────────────────┐ │

│ │ Column Chunk: Country │ │

│ │ ├─ Page 1: UK, USA, USA │ │

│ └────────────────────────────────────────┘ │

│ ┌────────────────────────────────────────┐ │

│ │ Column Chunk: Date │ │

│ │ ├─ Page 1: 2023-01-04, 2023-01-05, │ │

│ │ 2023-01-06 │ │

│ └────────────────────────────────────────┘ │

│ ┌────────────────────────────────────────┐ │

│ │ Column Chunk: Sales Amount │ │

│ │ ├─ Page 1: 180, 120, 220 │ │

│ └────────────────────────────────────────┘ │

│ ┌────────────────────────────────────────┐ │

│ │ Row Group Metadata │ │

│ │ ├─ Num Rows: 3 │ │

│ │ ├─ Min/Max per Column: │ │

│ │ • Product: Socks/T-Shirt │ │

│ │ • Customer: Alex/Jane Doe │ │

│ │ • Country: UK/USA │ │

│ │ • Date: 2023-01-04 to 2023-01-06 │ │

│ │ • Sales Amount: 120 to 220 │ │

│ └────────────────────────────────────────┘ │

├──────────────────────────────────────────────┤

│ File Metadata │

│ ┌────────────────────────────────────────┐ │

│ │ Schema: │ │

│ │ • Product: string │ │

│ │ • Customer: string │ │

│ │ • Country: string │ │

│ │ • Date: date │ │

│ │ • Sales Amount: double │ │

│ ├────────────────────────────────────────┤ │

│ │ Compression Codec: Snappy │ │

│ ├────────────────────────────────────────┤ │

│ │ Num Row Groups: 2 │ │

│ ├────────────────────────────────────────┤ │

│ │ Offsets to Row Groups │ │

│ │ • Row Group 1: offset 128 │ │

│ │ • Row Group 2: offset 1024 │ │

│ └────────────────────────────────────────┘ │

├──────────────────────────────────────────────┤

│ File Footer │

│ ┌────────────────────────────────────────┐ │

│ │ Offset to File Metadata: 2048 │ │

│ │ Magic Number: "PAR1" │ │

│ └────────────────────────────────────────┘ │

└──────────────────────────────────────────────┘

PAR1 - A 4-byte string "PAR1" indicating this is a Parquet file.

The type of compression used (e.g., Snappy).

Snappy

- Low CPU Util

- Low Compression Rate

- Splittable

- Use Case: Hot Layer

- Compute Intensive

GZip

- High CPU Util

- High Compression Rate

- Splittable

- Use Case: Cold Layer

- Storage Intensive

Encoding

Encoding is the process of converting data into a different format to:

- Save space (compression)

- Enable efficient processing

- Support interoperability between systems

Packing clothes and necessities in a luggage vs organizing them in separate sections for easier retrieval.

Plain Encoding

- Stores raw values as-is (row-by-row, then column-by-column).

- Default for columns that don’t compress well or have high cardinality (too many unique values,ex id/email). Ex: Sales Amount

Dictionary Encoding

-

Stores a dictionary of unique values and then stores references (indexes) to those values in the data pages.

-

Great for columns with repeated values.

Example:

- 0: Ball

- 1: T-Shirt

- 2: Socks

- Data Page: [0,1,2,2,1,2]

Reduces storage for repetitive values like "Socks".

Run-Length Encoding (RLE)

-

Compresses consecutive repeated values into a count + value pair.

-

Ideal when the data is sorted or has runs of the same value.

Example:

If Country column was sorted: [USA, USA, USA, UK, UK, UK]

RLE: [(3, USA), (3, UK)]

- Efficient storage for sorted or grouped data.

Delta Encoding

-

Stores the difference between consecutive values.

-

Best for numeric columns with increasing or sorted values (like dates).

Example:

Date column: [2023-01-01, 2023-01-02, 2023-01-03, ...]

Delta Encoding: [2023-01-01, +1, +1, +1, ...]

- Very compact for sequential data.

Bit Packing

-

Packs small integers using only the bits needed rather than a full byte.

-

Often used with dictionary-encoded indexes.

Example:

Dictionary indexes for Product: [0,1,2,2,1,2]

Needs only 2 bits to represent values (00, 01, 10).

Saves space vs. storing full integers.

Key Features of Parquet

Columnar Storage

Schema Evolution

- Supports complex nested data structures (arrays, maps, structs).

- Allows the schema to evolve over time, making it highly flexible for changing data models.

Compression

-

Parquet allows the use of highly efficient compression algorithms like Snappy and Gzip.

-

Columnar layout improves compression by grouping similar data together—leading to significant storage savings.

Various Encodings

Language Agnostic

- Parquet is built from the ground up for cross-language compatibility.

- Official libraries exist for Java, C++, Python, and many other languages—making it easy to integrate with diverse tech stacks.

Seamless Integration

-

Designed to integrate smoothly with a wide range of big data frameworks, including:

- Apache Hadoop

- Apache Spark

- Amazon Glue/Athena

- Clickhouse

- DuckDB

- Snowflake

- and many more.

Python Example

import pandas as pd

file_path = 'https://raw.githubusercontent.com/gchandra10/filestorage/main/sales_100.csv'

# Read the CSV file

df = pd.read_csv(file_path)